lol first the background is scenic mountains, then it tilts down and when it tilts back up, it’s basically mordor 😂

This is so surreal.

Like, the fact that the scene was generally continuous is absolutely crazy. If you look closely it falls apart, but most people don’t look too closely when scrolling Instagram or Twitter.

But my main thing is this is how my memories “look” when I try and remember something; I don’t necessarily remember every detail. I’m having a hard time explaining it, but if you extrapolate what I see when I think of my memories, I get this same slightly smeary, not-perfect recollection. Idk, just rambling, but this is getting a bit existential for me.

I’ve had the same thought. When it makes mistakes, it looks exactly the same as the “mistakes” in my dreams.

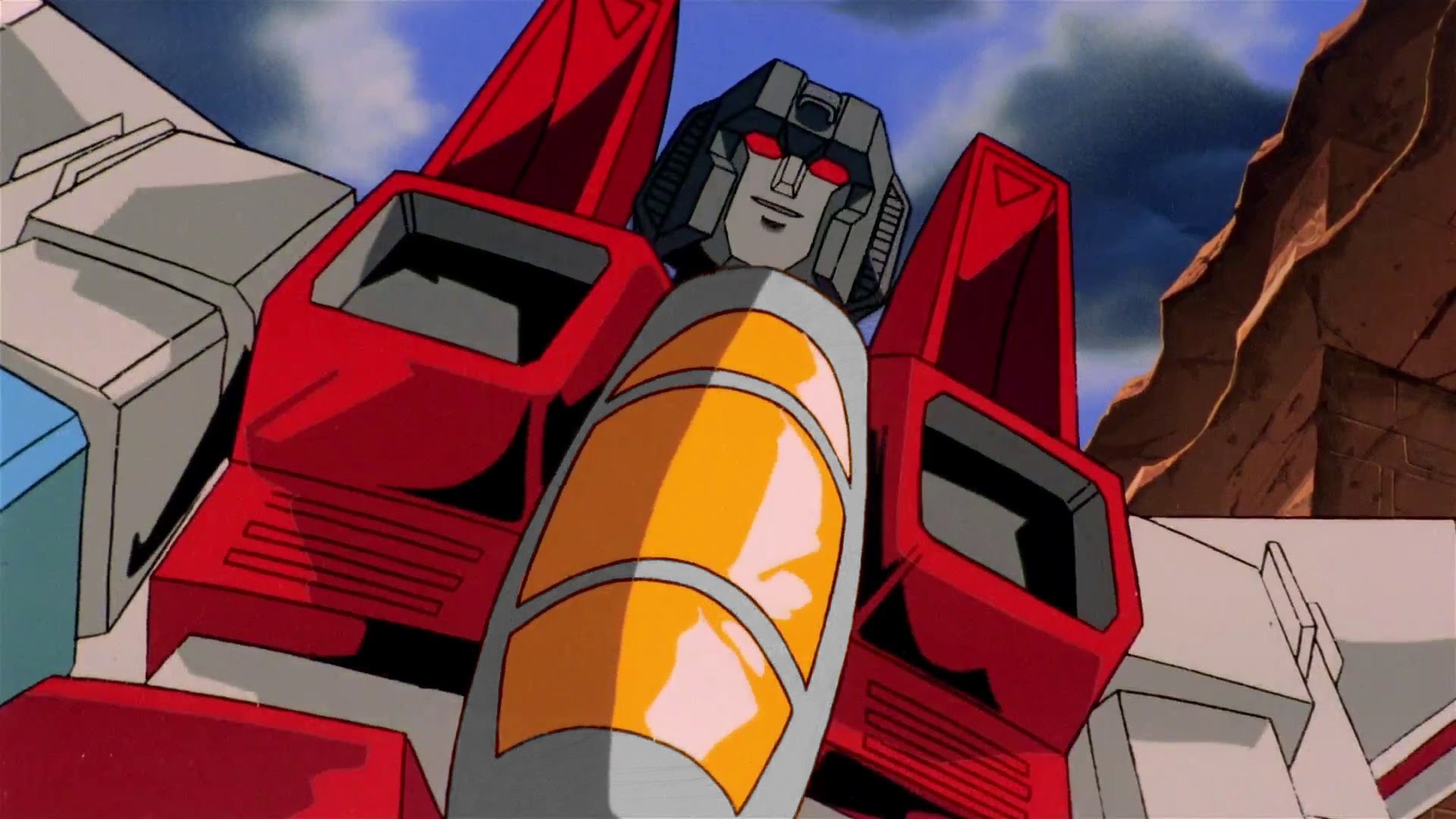

Looks like an episode of Stargate 😂

This is the worst it will ever be again.

All the little goofs people caught are this generation’s seven-fingered third hands, which y’don’t really see anymore. They’ll mostly just disappear. Some could be painted out with downright sloppy work, given better tools. You want a guy right there? Blob him in. These are denoising algorithms. Ideally we’ll stop seeing much of anything based on prompts alone. You can feed in any image or video and it’ll remove all the marble that does not look like a statue. (Someone already did ‘Bad Apple but every frame is a medieval landscape.’)

We’re in the Amiga juggler demo phase of this technology. “Look, the computer can render whole animated scenes in 3D!” Cool, what do you plan to do with it? “I don’t understand the question.” It’s not gonna be long before someone pulls a Toy Story and uses this limited tech to, y’know, tell a story.

Take the SG-1 comparison. Somebody out there has a fan script, bad cosplay, and a green bedsheet, and now that’s enough to produce a mildly gloopy unauthorized episode of a twenty-year-old big-budget TV show, entirely by themselves. Just get on-camera and do each role as sincerely as you can manage, edit that into some Who Killed Captain Alex level jank, and tell the machine to unfuck it.

Cartoons will be buck-wild, thanks to interactive feedback. With enough oomph you could go from motion comics to finished “CGI” in real-time. Human artists sketch what happens, human artists catch where the machine goofs, and a supercomputer does the job of Taiwanese outsourcing on a ten-second delay. A year later it might run locally on your phone.

People are already starting to make shows with the tech. Can’t wait to see it mature more, since it’s basically the ability to recreate anything you can imagine. So much creativity is going to take off once concept is freed from execution.

This is truly incredible. The new future is here. This is something I didn’t predict.