- cross-posted to:

- books@lemmy.world

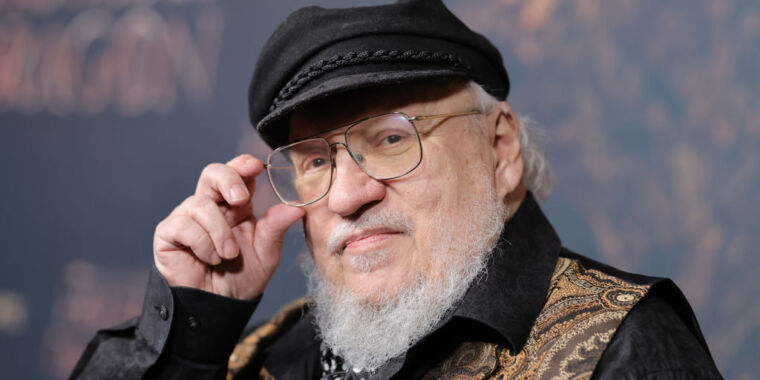

Yesterday, popular authors including John Grisham, Jonathan Franzen, George R.R. Martin, Jodi Picoult, and George Saunders joined the Authors Guild in suing OpenAI, alleging that training the company’s large language models (LLMs) used to power AI tools like ChatGPT on pirated versions of their books violates copyright laws and is “systematic theft on a mass scale.”

“Generative AI is a vast new field for Silicon Valley’s longstanding exploitation of content providers,” Franzen said in a statement provided to Ars. “Authors should have the right to decide when their works are used to ‘train’ AI. If they choose to opt in, they should be appropriately compensated.”

Responding to two lawsuits filed earlier this year by authors making similar claims, OpenAI previously argued that the authors suing “misconceive the scope of copyright, failing to take into account the limitations and exceptions (including fair use) that properly leave room for innovations like the large language models now at the forefront of artificial intelligence.”

This latest complaint argued that OpenAI’s “LLMs endanger fiction writers’ ability to make a living, in that the LLMs allow anyone to generate—automatically and freely (or very cheaply)—texts that they would otherwise pay writers to create.”

Authors are also concerned that the LLMs fuel AI tools that “can spit out derivative works: material that is based on, mimics, summarizes, or paraphrases” their works, allegedly turning their works into “engines of” authors’ “own destruction” by harming the book market for them. Even worse, the complaint alleged, businesses are being built around opportunities to create allegedly derivative works:

Businesses are sprouting up to sell prompts that allow users to enter the world of an author’s books and create derivative stories within that world. For example, a business called Socialdraft offers long prompts that lead ChatGPT to engage in ‘conversations’ with popular fiction authors like Plaintiff Grisham, Plaintiff Martin, Margaret Atwood, Dan Brown, and others about their works, as well as prompts that promise to help customers ‘Craft Bestselling Books with AI.’

They claimed that OpenAI could have trained their LLMs exclusively on works in the public domain or paid authors “a reasonable licensing fee” but chose not to. Authors feel that without their copyrighted works, OpenAI “would have no commercial product with which to damage—if not usurp—the market for these professional authors’ works.”

“There is nothing fair about this,” the authors’ complaint said.

Their complaint noted that OpenAI chief executive Sam Altman claims that he shares their concerns, telling Congress that "creators deserve control over how their creations are used” and deserve to “benefit from this technology.” But, the claim adds, so far, Altman and OpenAI—which, claimants allege, “intend to earn billions of dollars” from their LLMs—have “proved unwilling to turn these words into actions.”

Saunders said that the lawsuit—which is a proposed class action estimated to include tens of thousands of authors, some of multiple works, where OpenAI could owe $150,000 per infringed work—was an “effort to nudge the tech world to make good on its frequent declarations that it is on the side of creativity.” He also said that stakes went beyond protecting authors’ works.

“Writers should be fairly compensated for their work,” Saunders said. "Fair compensation means that a person’s work is valued, plain and simple. This, in turn, tells the culture what to think of that work and the people who do it. And the work of the writer—the human imagination, struggling with reality, trying to discern virtue and responsibility within it—is essential to a functioning democracy.”

The authors’ complaint said that as more writers have reported being replaced by AI content-writing tools, more authors feel entitled to compensation from OpenAI. The Authors Guild told the court that 90 percent of authors responding to an internal survey from March 2023 “believe that writers should be compensated for the use of their work in ‘training’ AI.” On top of this, there are other threats, their complaint said, including that “ChatGPT is being used to generate low-quality ebooks, impersonating authors, and displacing human-authored books.”

Authors claimed that despite Altman’s public support for creators, OpenAI is intentionally harming creators, noting that OpenAI has admitted to training LLMs on copyrighted works and claiming that there’s evidence that OpenAI’s LLMs “ingested” their books “in their entireties.”

“Until very recently, ChatGPT could be prompted to return quotations of text from copyrighted books with a good degree of accuracy,” the complaint said. “Now, however, ChatGPT generally responds to such prompts with the statement, ‘I can’t provide verbatim excerpts from copyrighted texts.’”

To authors, this suggests that OpenAI is exercising more caution in the face of authors’ growing complaints, perhaps since authors have alleged that the LLMs were trained on pirated copies of their books. They’ve accused OpenAI of being “opaque” and refusing to discuss the sources of their LLMs’ data sets.

Authors have demanded a jury trial and asked a US district court in New York for a permanent injunction to prevent OpenAI’s alleged copyright infringement, claiming that if OpenAI’s LLMs continue to illegally leverage their works, they will lose licensing opportunities and risk being usurped in the book market.

Ars could not immediately reach OpenAI for comment. [Update: OpenAI’s spokesperson told Ars that “creative professionals around the world use ChatGPT as a part of their creative process. We respect the rights of writers and authors, and believe they should benefit from AI technology. We’re having productive conversations with many creators around the world, including the Authors Guild, and have been working cooperatively to understand and discuss their concerns about AI. We’re optimistic we will continue to find mutually beneficial ways to work together to help people utilize new technology in a rich content ecosystem.”]

Rachel Geman, a partner with Lieff Cabraser and co-counsel for the authors, said that OpenAI’s “decision to copy authors’ works, done without offering any choices or providing any compensation, threatens the role and livelihood of writers as a whole.” She told Ars that “this is in no way a case against technology. This is a case against a corporation to vindicate the important rights of writers.”

Holy cow, the comments here are so alarming. OpenAI isn’t some nonprofit trying to better humanity. They are a for-profit company making money with a product that is ILLEGALLY derived from the work of others. Why are y’all so apathetic? Pretty disheartening.

Lemmy’s opinion on the topic is often very biased towards the views of tech bros rather than writers/creators, at least that’s what I’ve observed. Tech bros have a boner for LLM AIs. They don’t have anything to lose from the development of these AIs, so they don’t seem to understand the concerns of people who do.

This has been a surprise for me. I see this community as pro privacy, anti big tech, and anti capitalism. AI seems like a hot button issue at the confluence of all three, and yet comments suggest many have rose tinted glasses for tech companies with LLMs.

To pile onto that, I was recently disturbed to find I was the dissenting voice in a comment thread by saying that we should not use AI to produce generated CSAM. The top comment defended the idea.

It is impossible to generate CSAM.

That’s the entire goddamn point of calling it CSAM. To stop people mislabeling drawings as CP, when they don’t understand that Bart Simpson doesn’t have the same moral standing as a real human child.

You cannot abuse children who do not exist.

Whether you’re opposed to those generated images anyway is a separate issue.

To be fair, regardless of where you stand on the topic, there is a big difference between a drawing of a child, and a photo realistic recreation of a child that would be indistinguishable from real csam. And if ai was used to make these images in the likeness of real children, which I guarantee many people who consume that sort of stuff would do, then it very much would still be abusive material regardless of whether it’s real or not.

The difference between any made-up image and actual child abuse is insurmountable.

Copy-pasting a real face onto such an image is still not the same kind of problem as child rape.

And you’re surprised this correlates against letting authors control information for money?

The software-freedom crowd just wants OpenAI’s models published, so no single company gets to limit access to the distilled essence of all public knowledge. Being against “big tech” has never meant being against… tech. We’re not Amish. We’re open-source diehards. Sometimes that comes through as declaring a vendetta against intellectual property, as a concept. No kidding those folks aren’t lining up behind GRRM, when he declares a robot’s not allowed to learn English from his lengthy and well-known books.

My comment addresses my perception of Lemmy users, not the open source community. These two groups are not the same as is evidenced by the frequent complaints on the front page about open source gatekeeping and quantity of open source topics.

Let me add, I’m also a long time user and contributor of/to open source and free software. I think it’s not correct to assume we’re a single group that all share the same opinion. Best, cloudy1999

These two groups massively overlap, as evidenced by the quantity of open source topics to be complained about.

You don’t get to hand-wave about a group of people, based on their opinions on this subject, and then complain about someone addressing your summary on its merits.

Overlap yes, equal no. I don’t believe either of my comments included a complaint. People are entitled to their opinions. My original and new remarks were only observations, no offense intended.

Suggesting this is a contradiction, a betrayal of stated ideals, is a damning insult.

Yeah, I was downvoted to hell in a copyright thread for suggesting that my work had worth and that I wasn’t just freely handing it over to the general public. Sounds like a bunch of 12 year olds that have never created a fucking thing in their life except for some artistic skidmarks in their underwear. These kids have a lot to learn about life.

Look at any discussion around Sync for Lemmy and you’ll get the same thing. Oh a developer created an app that is a flawless experience so far and looks great, and he wants 20 bucks for it? Burn him at the stake!

I’m all for FOSS and stuff but people here lean more entitled than they do “free and open”.

Yeah. I write software for a living and I use open source stuff extensively. I contribute sometimes to open source projects, but not everything can be open source or I’ll be back to flipping burgers.

Personally I write for free, simply because of the joy of it, and when I heard that Google was using people’s Google Drives as free training for their bots, I pulled everything. Not because I want to make a buck, but because Google sure as hell was going to make a buck off of my work without paying me a dime. It’s a little thing known as principle.

On top of this, it’s becoming increasingly clear that many tech bros have never been genuinely moved by a piece of art, whether it’s visual or written, so they genuinely don’t understand that AI art is devoid of any real emotional impact. AI art just throws together cliches. It reminds me of that shitty AI-generated conversation between Plato and Bill Gates, when so many tech bros were talking about how “inspiring” it was.

Don’t get me wrong, I love these AI tools coming out but they’re so over hyped sometimes.

The standard for all tech. At the beginning of this I remember them telling me that it was all a new age. Yeah, I’ve been through a few of those now. My favorites were driverless cars and crypto, the same bros told me the same things back then too. Truth is we’ll get a few new really cool things, society will get a bit worse for it, and then we’ll find a new shiny thing.

LLMs and AI aren’t even new, I studied about them in college. 10 years ago. It’s just that we have faster hardware that can finally support them.

This theoretical sci-fi stuff from barely a decade ago is now real enough to threaten entire industries. Yawn, am I right?

Disagreement isn’t lack of understanding. Some of us are opposed to copyright, entirely. I’m personally not. But: this is not what copyright exists to protect against. And the more it feeds on, the less it resembles any specific work.

Fuck all the replies calling people unfeeling worthless robots over this. Miserable dehumanizing hypocrites.

In another thread I saw today somebody said the best way to learn how to make an Android app was to buy chatgpt. That was their advice…

The number of people I’ve seen who think Chatgpt is some sort of authority or reliable source of information is genuinely concerning.

Exactly. It’s called “chat” for a reason. Not wikipediagpt

Personally I think the copyright system should be abolished entirely, alongside capitalism, but I am radical like that

I just find “intellectual property” copyright to be completely unethical.

I don’t exactly love the outsourcing of human creativity to AI, and personally really hope society continues to value actual human creativity, but the illegality of this stuff simply hasn’t been established.

What is clear is that directly reproducing copyrighted works is illegal. Additionally, a human taking extensive inspiration from copyrighted works is generally perfectly legal. Some of the key questions are:

Personally, I’d say that (1) is a maybe, (2) is a no under current law, and therefore (3) is a yes, and I’d love to see legislation passed clarifying how we as a society want to treat AI works. I’m strongly of the opinion that human creativity is something very special and that it should be protected, but I am concerned about a future where that isn’t valued very much in the face of an AI that knows your personal tastes exactly and can simply generate all the “content” you want in an instant.

Isn’t the point of the lawsuit to find out if it’s illegal? Until a ruling comes down, it’s pretty ambiguous.

I was on board with AI using pre-existing works to learn off of, but this was before they started hiding their content behind subscription services. Once that happened this became unethical. It’s the difference between having a Pokemon fan game, and trying to publish your own fan mod of Pokemon Sword and Shield onto the market and expecting Nintendo to just be cool with it.

The same people who were shilling for crypto and NFTs are now on board the LLM wagon, and they tend to be active in communities like these.

Yup, I remember listening to them talk about how blockchain was going to change the world, and the hype was exactly the same. There are things that will improve, tech will get a bit easier, but the tech will never live up to the hype. They’ll just find something new to chase after when the shine wears off here.