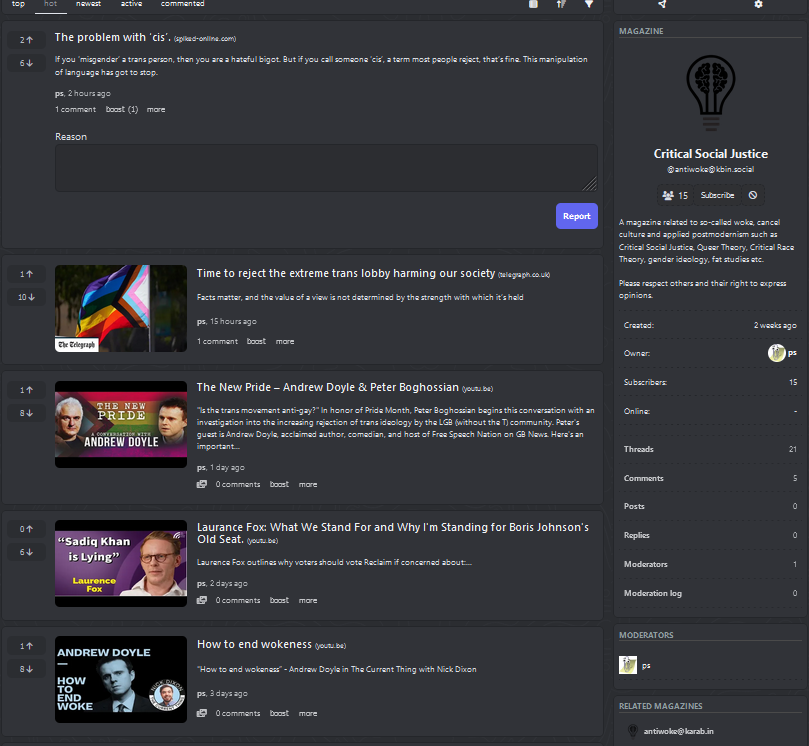

@ernest how do I report a magazine on kbin.social? There is a user called “ps” who is posting to his own “antiwoke” magazine on kbin.social. Please remove this and don’t give them a chance to establish themselves on kbin.social. When I report his stuff, will it go to him because he is the moderator of the magazine? Seems like a problem. Screenshot of the “antiwoke” magazine/sub on kbin.social. Four headlines are visible; two examples: “Time to reject the extreme trans lobby harming our society” and “How to end wokeness”. #Moderation #kbin #kbin.social

Edit: don’t feed the troll. I’m sure ernest will delete them all when he sees this. Report and move on.

Edit 2: Ernest responded:

“I just need a little more time. There will likely be a technical break announced tomorrow or the day after tomorrow. Along with the migration to new servers, we will be introducing new moderation tools that I am currently working on and testing (I had it planned for a bit later in my roadmap). Then, I will address your reports and handle them very seriously. I try my best to delete sensitive content, but with the current workload and ongoing relocation, it takes a lot of time. I am being extra cautious now. The regulations are quite general, and I would like to refine them together with you and do everything properly. For now, please make use of the option to block the magazine/author.”

❤

I’d rather nip it in the bud. You’re just letting things fester.

I don’t disagree with the sentiment, but it will become impossible to accomplish, practically speaking, as the fediverse grows. There’s only so much that can be done with volunteers, and it’s not like armies of paid staffers work much better (as we’ve seen the major tech corps try to do).

There is a sociological aspect to this: numerous studies have confirmed the effects of highlighting bad actors. There’s a copycat effect (as studies on mass shootings show) as well as what we call the Streisand effect. Both inadvertently encourage others to perpetuate the behaviour rather than serving to limit it.

Allowing bad actors to advertise themselves is highlighting them. Banning them and deleting their communities is the opposite of highlighting them.

Exactly. We agree? That’s what I said/meant. This post doesn’t ban them; it’s inadvertently advertising their content. There have been several posts like this recently. While they may mean well, they likely have the opposite effect.

So your solution is to just give up and let hate fester? When has appeasement ever worked?

Not at all. I think you’re conflating what I said with what someone else said. I’m only suggesting we don’t inadvertently promote this content by creating a front-page post denouncing it.

The point about it being impossible to accomplish is about perfection. It’s a whack-a-mole game. Since this content and these people will always be there until found, it’s better not to give them more of an audience.

No site will ever perfectly remove objectionable content. It’s one reason why the upvote/downvote system is so valuable for a site like this.

You can’t avoid hate and hope it recedes. You have to take it directly head on and stomp it out immediately.

If they decide to move elsewhere, then follow them there and continue rooting them out.

Just “letting people decide” is useless and will only enable them to continue.

Agreed, I think you’re still conflating things I never said. Nothing was in the “let the people decide” vein.

That’s why I think it’s better to silently remove them rather than making posts saying “look at this bad guy right there”.

I think the problem is that at the moment, the system is new enough that there’s no way to get this sort of content removed. Hence this front page post. It’s not about calling attention to the magazine, it’s about calling attention to the entire issue…

Where does this sentiment come from? Reddit for the most part already does this. Twitter before Elon showed up did this. Most modern sites already do this.

The only place I can think of where this is commonplace is 4chan, because they don’t moderate.

Yes, highlighting bad actors over a course of time can be problematic. But the point in this case is to point out that we don’t have the tools to deal with said bad actor, the tools that other sites have. It’s not being said in vain; the goal is to make people aware that something needs to be done, so that people never even see the bad actor and have no reason to bring attention to them.

There is a purpose to the current efforts. I think everyone understands that constantly bringing attention to them will do no good, but the goal here is to bring attention to tools that are needed, so that it doesn’t happen again, or at the very least to this extent.

You might be conflating my comment with someone else’s? I’m not against moderating. I just think it’s a bad idea to blast these communities or users onto the front page when they’re found.

No example has been able to squash out bad actors and unwanted content completely. That’s the impossible task I’m referring to. Neither volunteers nor paid staff have accomplished this for any site. In all your examples there are still areas flying under the radar.

As such, it’s better not to inadvertently fan the flames when you find the fire. Don’t make their soapbox bigger. Instead, put it out quietly so it doesn’t harm anyone else.

Examples are good when trying to point out a problem actually exists and not have certain people trying to tone it down and make it not seem like as big a problem as it is, despite even the devs acknowledging there’s a problem.

The final point is that more tools are being worked on. The thread did do something, so trying to argue a point that would basically have prevented it just seems… in poor taste.

Everything you’re talking about is perception, friend. You chose to take my comment that way. The dev tools were being worked on long before this post.

As I said before, I’m not making this up, the phenomenon is studied and the effect is proven.

The biggest thing I’m afraid of happening to Kbin/the lemmyverse is that it will end up like Ruqqus, especially now that it seems to be swamped with trolls.

I expect that instances will get more locked down. Perhaps those of us on an instance can vouch for new users who might join, but I can’t see how a volunteer admin could police a million-user instance. I used to run a 10k-user discussion site, and while that wasn’t a full-time job, it was still a giant pain in the ass at times. If we can get to a steady state where an instance has a core of active posters and lurkers, then that seems better than infinite growth.

That then surely leads to federated instances that each represent the tolerances of their admin(s) and they presumably federate or not with other instances with similar sensibilities.

In the end the nazis will get their nazi instance and federate with like-minded types. They get defederated everywhere else and won’t really be a problem (maybe for the FBI). (Though I’m not certain that all internet nazis truly are; I think there’s a group of trolls that get their kicks from being controversial and will get no joy from being surrounded by people who accept them.)

The problems are going to be in the gray areas. For example, the argument that trans people don’t deserve to exist… I find that abhorrent, but there are people who will happily say that on TV, and there are CEOs of $44B social networks that appear to agree. Some instances will tolerate that on the grounds of free speech and others will not, then the admins are left trying to decide what’s grounds for defederation.

However, in my limited experience, the thing that kills projects like this is too much navel-gazing. There will always be some trolling and noise, but if the remaining users expend all their energy talking about it, then the whole thing collapses in on itself. I feel like this is starting to happen on reddit, where lots of subs are consumed by meta, but the best thing we can do here is get out and create active communities.