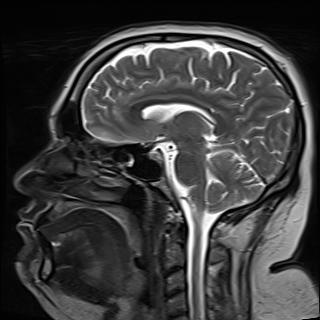

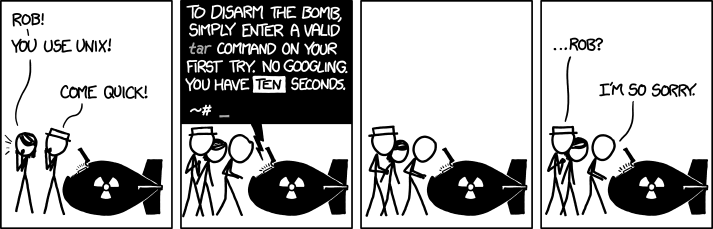

Oblig. XKCD:

`tar -h`

Edit: wtf… It’s actually `tar -?`. I’m so disappointed

boom

tar eXtractZheVeckingFile

Me trying to decompress a .tar file

Joke’s on you, .tar isn’t compression

That’s not going to stop me from getting confused every time I try!

You don’t need the v, it just means verbose and lists the extracted files.

You don’t need the z, it auto detects the compression
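Python’s standard `tarfile` module can also read an archive without being told how it is compressed: mode `"r:*"` works the compression out by itself. A minimal sketch, with made-up helper names `make_archive` and `read_any`, that writes the same one-file archive uncompressed, gzip, bzip2 and xz, then reads every variant back the same way:

```python
import io
import tarfile

def make_archive(mode: str) -> bytes:
    """Build a one-file tar archive in memory with the given write mode."""
    buf = io.BytesIO()
    data = b"hello from the archive\n"
    with tarfile.open(fileobj=buf, mode=mode) as tar:
        info = tarfile.TarInfo(name="hello.txt")
        info.size = len(data)
        tar.addfile(info, io.BytesIO(data))
    return buf.getvalue()

def read_any(blob: bytes) -> dict:
    """Read an archive without naming its compression: "r:*" figures it out."""
    with tarfile.open(fileobj=io.BytesIO(blob), mode="r:*") as tar:
        return {m.name: tar.extractfile(m).read()
                for m in tar.getmembers() if m.isfile()}

for mode in ("w", "w:gz", "w:bz2", "w:xz"):
    print(mode, read_any(make_archive(mode)))
```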

Yeah, I just tell our Linux newbies `tar xf`, as in “extract file”, and that seems to stick perfectly well.

That’s still kinda new. It didn’t always do that.

Per https://www.gnu.org/software/tar/, it’s been the case since 2004, so for about 19 and a half years…

Telling someone that they are Old without saying they are old…

Something something don’t cite the old magics something something I was there when it was written…

Right, but you have no way of telling what version of tar that bomb is running

You may not, but I need it. Data anxiety is real.

tar -xzf

(read with German accent:) extract the files

Ixtrekt ze feils

German here and no shit: that is how I’ve remembered it since the first time someone made that comment

Same. Also German btw 😄

Not German but I remember the comment but not the right letters so I would have killed us all.

That’s so good I wish I needed to memorize the command

`z` is for gzip archives only.

`tar xf` for eXtract the File

tar -uhhhmmmfuckfuckfuck

The fish shell just suggests my past tar commands

So I don’t need to remember strange flags

I use zsh and love the fish autocomplete, so I use this:

https://github.com/zsh-users/zsh-autosuggestions

Also have `fzf` for `ctrl + r` to fuzzy find previous commands.

I believe it comes with oh-my-zsh, just has to be enabled in plugins and it just works™

man tar

you never said I can’t run a command before it.

without looking, what’s the flag to push over ssh with compression

scp

not compressed by default

That’s yet another great joke that GNU ruined.

Zip makes different tradeoffs. Its compression is basically the same as gz, but you wouldn’t know it from the file sizes.

Tar archives everything together, then compresses. The advantage is that there are more patterns available across all the files, so it can be compressed a lot more.

Zip compresses individual files, then archives. The individual files aren’t going to be compressed as much because they aren’t handling patterns between files. The advantages are that an error early in the file won’t propagate to all the other files after it, and you can read a file in the middle without decompressing everything before it.
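To see the solid-versus-per-file difference described above, here is a deliberately contrived sketch using Python’s `tarfile` and `zipfile`: 200 small files that all share one 2 KiB block of random bytes (the data and sizes are made up for illustration). Each file is incompressible on its own but highly redundant across files, so gzip over the whole tar stream can exploit it while zip’s per-member DEFLATE cannot. Real-world gaps are usually much smaller.

```python
import io
import random
import tarfile
import zipfile

# 200 small files sharing one 2 KiB block of random bytes (Python 3.9+ for randbytes).
shared = random.Random(0).randbytes(2048)
files = {f"file{i:03}.bin": shared + str(i).encode() for i in range(200)}

def tar_gz_size(files: dict) -> int:
    """Archive first, then compress the whole stream ("solid" compression)."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return len(buf.getvalue())

def zip_size(files: dict) -> int:
    """Compress each member on its own with DEFLATE, then archive."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in files.items():
            zf.writestr(name, data)
    return len(buf.getvalue())

print("tar.gz:", tar_gz_size(files), "bytes")
print("zip:   ", zip_size(files), "bytes")
```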

Yeah that’s a rather important point that’s conveniently left out too often. I routinely extract individual files out of large archives. Pretty easy and quick with zip, painfully slow and inefficient with (most) tarballs.


Can you evaluate the directory tree of a tar without decompressing? Not sure if gzip/bzip2 preserve that.
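On listing and pulling out single files: a zip keeps a central directory at the end that lists every member and its offset, while a `.tar.gz` is one gzip stream with no index, so even listing it means decompressing the stream. A sketch that counts how many bytes each format actually reads (`CountingBytesIO` is a made-up helper, and the 200 random 2 KiB files are arbitrary test data):

```python
import io
import random
import tarfile
import zipfile

class CountingBytesIO(io.BytesIO):
    """In-memory file that counts how many bytes are read from it."""

    def __init__(self, data: bytes):
        super().__init__(data)
        self.bytes_read = 0

    def read(self, size=-1):
        chunk = super().read(size)
        self.bytes_read += len(chunk)
        return chunk

rng = random.Random(1)
files = {f"dir/file{i:03}.bin": rng.randbytes(2048) for i in range(200)}
target = "dir/file199.bin"  # the last member in both archives

zbuf = io.BytesIO()
with zipfile.ZipFile(zbuf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
    for name, data in files.items():
        zf.writestr(name, data)

tbuf = io.BytesIO()
with tarfile.open(fileobj=tbuf, mode="w:gz") as tar:
    for name, data in files.items():
        info = tarfile.TarInfo(name)
        info.size = len(data)
        tar.addfile(info, io.BytesIO(data))

# zip: the central directory lists every member and its offset, so listing
# and extracting one member only touches a small part of the file.
zsrc = CountingBytesIO(zbuf.getvalue())
with zipfile.ZipFile(zsrc) as zf:
    names = zf.namelist()
    zip_data = zf.read(target)

# tar.gz: no index, so listing the members means decompressing the whole
# stream from the start (and tarfile may rewind and decompress again to extract).
tsrc = CountingBytesIO(tbuf.getvalue())
with tarfile.open(fileobj=tsrc, mode="r:gz") as tar:
    tar_names = tar.getnames()
    tar_data = tar.extractfile(target).read()

print(f"zip:    read {zsrc.bytes_read} of {len(zbuf.getvalue())} bytes")
print(f"tar.gz: read {tsrc.bytes_read} of {len(tbuf.getvalue())} bytes")
```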

Nowhere in here do you cover bzip, the subject of this meme. And tar does not compress.

It’s just a different layer of compression. Better than gzip generally, but the tradeoffs are exactly the same.

Well, yes. But your original comment has inaccuracies due to those 2 points.
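The point that tar itself does not compress is easy to show: build a plain tar, then run its bytes through bzip2 as a completely separate step. A minimal sketch using Python’s `tarfile` and `bz2` modules (file name and contents are arbitrary):

```python
import bz2
import io
import tarfile

# Layer 1: tar only bundles files; mode "w" applies no compression at all.
raw = io.BytesIO()
data = b"some very repetitive text " * 1000
with tarfile.open(fileobj=raw, mode="w") as tar:
    info = tarfile.TarInfo("notes.txt")
    info.size = len(data)
    tar.addfile(info, io.BytesIO(data))
tar_bytes = raw.getvalue()

# Layer 2: bzip2 compresses that byte stream; it knows nothing about files.
tar_bz2 = bz2.compress(tar_bytes)
print(len(tar_bytes), "bytes as .tar,", len(tar_bz2), "bytes as .tar.bz2")

# Peel the layers in reverse: bunzip2 first, then read an ordinary tar.
with tarfile.open(fileobj=io.BytesIO(bz2.decompress(tar_bz2)), mode="r:") as tar:
    print(tar.getnames())  # ['notes.txt']

# tarfile's own "r:bz2" mode accepts the hand-layered result too.
with tarfile.open(fileobj=io.BytesIO(tar_bz2), mode="r:bz2") as tar:
    assert tar.extractfile("notes.txt").read() == data
```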

Obligatory shilling for unar, I love that little fucker so much

- Single command to handle uncompressing nearly all formats.

- No obscure flags to remember, just `unar <yourfile>`

- Makes sure output is always contained in a directory

- Correctly handles weird Japanese zip files with SHIFT-JIS filename encoding, even when standard `unzip` doesn’t
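On the SHIFT-JIS point: Python’s `zipfile` decodes member names that lack the zip “UTF-8” flag as cp437, so it produces the same kind of garbage. A rough sketch of undoing that, assuming you already know the archive was made with Shift-JIS (`fixed_name` is a made-up helper, and the test archive is simulated by patching bytes). On Python 3.11+, `zipfile.ZipFile(..., metadata_encoding="shift_jis")` does this decoding for you.

```python
import io
import zipfile

UTF8_FLAG = 0x800  # general-purpose flag bit 11: "file name is UTF-8"

def fixed_name(info: zipfile.ZipInfo, legacy: str = "shift_jis") -> str:
    """Recover a member name that zipfile decoded as cp437 (no UTF-8 flag)."""
    if info.flag_bits & UTF8_FLAG:
        return info.filename  # already real Unicode, leave it alone
    # zipfile turned the raw name bytes into text via cp437; undo that to get
    # the original bytes back, then decode them with the archiver's encoding.
    return info.filename.encode("cp437").decode(legacy)

# Simulate a zip made on a Japanese-locale machine: write an ASCII placeholder
# name, then patch in the Shift-JIS bytes of the real name (both 12 bytes long,
# so no header offsets move).
real_name = "雪歌ユフ.txt"
sjis = real_name.encode("shift_jis")
placeholder = "X" * (len(sjis) - len(".txt")) + ".txt"
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr(placeholder, b"voicebank sample")
raw = buf.getvalue().replace(placeholder.encode("ascii"), sjis)

with zipfile.ZipFile(io.BytesIO(raw)) as zf:
    for info in zf.infolist():
        print("as decoded by zipfile:", info.filename)  # cp437 mojibake
        print("re-decoded:", fixed_name(info))           # 雪歌ユフ.txt
```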

gonna start lovingly referring to good software tools as “little fuckers”

Happy cake day!

cheers!

What weird Japanese zip files are you handling?

Voicebanks for Utau (free (as in beer, iirc) clone of Vocaloid) are primarily distributed as SHIFT-JIS encoded zips. For example, try downloading Yufu Sekka’s voicebank: http://sekkayufu.web.fc2.com/ . If I try to `unzip` the “full set” zip, it produces a folder called РсЙ╠ГЖГtТPУ╞Й╣ГtГЛГZГbГgБi111025Бj. But `unar` detects the encoding and properly extracts it as 雪歌ユフ単独音フルセット(111025). I’m sure there’s some flag you can pass to `unzip` to specify the encoding, but I like having `unar` handle it for me automatically.

Ah, that’s pretty cool. I’m not sure I know of that program. I do know a little vocaloid though, but I only really listen to 稲葉曇(Inabakumori).

I know inabakumori! Their music is so cool! When I first listened to rainy boots and lagtrain, it made me feel emotions I thought I had forgotten a long time ago… I wish my japanese was good enough to understand the lyrics without looking them up ._. I’m also a huge fan of Kikuo. His music is just something completely unique, not to mention his insane tuning. He makes Miku sing in ways I didn’t think were possible lol

I get you, I want to learn more Japanese. I only understand a very small amount at this point. I don’t have any Miku songs that I have really wanted to listen to, but that could change. I might check out Kikuo then. Also I love the animations Inabakumori release with their songs too. They have some new stuff that’s really good if you haven’t checked it out yet.

The same thing with zip, just use “unzip <file>” right?

I’m an `atools` kinda person

Looks cool, I’ll check it out

Where .7z at

On windows.

7z is available for Linux as well (CLI only)

It is open-source too.

I know, but I’d say ppl on Linux tend to not use it.

.7z gang, represent

When I was on windows I just used 7zip for everything. Multi core decompress is so much better than Microsoft’s slow single core nonsense from the 90s.

Small ~~dick~~ package kings/queens rise up.

Yeah, 7z is the clear winner.

It’s much slower to decompress than DEFLATE ZIP though

.tar.xz

xz is quite slow though

There are several levels you can use to trade more processing time for a smaller file. That being said, I hate xz and it still feels slow AF every time I use it.
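Those levels map onto the `preset` argument of Python’s `lzma` module (0 to 9, the same scale as `xz -0` through `xz -9`). A sketch that compresses one made-up, log-like sample at a few presets; the exact sizes and timings will depend on your data and machine:

```python
import lzma
import time

# Sample input: a megabyte or two of repetitive, log-like text (made up).
data = b"".join(
    f"line {i}: status={i * 7919 % 1000} user=u{i % 37}\n".encode()
    for i in range(50_000)
)

sizes = {}
for preset in (0, 3, 6, 9):
    start = time.perf_counter()
    packed = lzma.compress(data, preset=preset)  # .xz container by default
    elapsed = time.perf_counter() - start
    assert lzma.decompress(packed) == data  # every level is lossless
    sizes[preset] = len(packed)
    print(f"preset {preset}: {len(packed):>8} bytes in {elapsed:.2f} s")
```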

It starting 0.5 seconds slower than usual saved us all a bit of a headache as it turns out.

I hate 7z, it’s slow (at least for me) and for some reason I often have problems with these files

tar c file | pxz > file.tar.xz

pixz is in the “extra” repo on Arch. Same as pigz.

Same algo as in 7z

I had no idea about that!

Yeah, it’s similar enough to tar.gz to always confuse me.

wait until you learn about `.tar.lz`

Tar lzma nuts, amirite?

Good for image backups, after zeroing empty space.
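Why zeroing helps: free space on a disk usually still holds leftovers of deleted files, which compress badly, while a run of zeros compresses to almost nothing. Python’s standard library has no lzip writer, so this sketch uses its `lzma` module (`.xz` output, same LZMA family) as a stand-in, and models a hypothetical 4 MiB image with random bytes as worst-case data:

```python
import lzma
import random

rng = random.Random(42)
MiB = 1 << 20

# Hypothetical 4 MiB disk image: 1 MiB of real data plus 3 MiB of "free" space.
# The leftovers in free space are modelled as random bytes; zeroing them first
# turns the free space into one long run of 0x00.
used = rng.randbytes(1 * MiB)
leftovers = rng.randbytes(3 * MiB)
zeroed = bytes(3 * MiB)

sizes = {}
for label, image in (("not zeroed", used + leftovers), ("zeroed", used + zeroed)):
    sizes[label] = len(lzma.compress(image, preset=1))
    print(f"{label:>10}: {len(image)} -> {sizes[label]} bytes")
```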

TAR LAZER!

cool people use zst

You can’t decrease something by more than 100% without going negative. I’m assuming this doesn’t actually decompress files before you tell it to.

Does this actually decompress in 1/13th the time?

Yeah, Facebook!

Sucks but yes that tool is damn awesome.

Meta also works with CentOS Stream at their Hyperscale variant.

Makes sense. There are actual programmers working at facebook. Programmers want good tools and functionality. They also just want to make good/cool/fun products. I mean, check out this interview with a programmer from pornhub. The poor dude still has to use jQuery, but is passionate about making the best product they can, like everyone in programming.

Right, I usually do that or lz4.

When I’m feeling cool and downloading a `*.tar*` file, I’ll `wget` to stdout, and `tar` from stdin. Archive gets extracted on the fly.

I have (successfully!) written an `.iso` to CD this way, too (pipe `wget` to `cdrecord`). Fun stuff.

Something like `wget avc.com | tar xvf`?

Almost, I think.

`wget -O - http://example.com/archive.tar | tar -xvf -`

Didn’t think this would ever work

This is what we call the UNIX way

tar stands for Tape ARchive. Tapes store data sequentially. Downloads are done sequentially.

It’s really just like a far away tape drive.
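The tape analogy is also why streaming works: tar only ever needs to read forward. Python’s `tarfile` has an explicit stream mode for this, `"r|*"`, which never seeks backwards and detects the compression itself. A sketch where a made-up `PipeLike` class (only a `read()` method, no `seek()`) stands in for the pipe coming out of `wget -O -`:

```python
import io
import tarfile

class PipeLike:
    """Read-only, non-seekable byte source, standing in for stdin fed by wget."""

    def __init__(self, data: bytes, chunk: int = 1000):
        self._data, self._pos, self._chunk = data, 0, chunk

    def read(self, size: int = -1) -> bytes:
        # Like a pipe, hand back at most `chunk` bytes per call.
        if size is None or size < 0:
            size = len(self._data) - self._pos
        out = self._data[self._pos:self._pos + min(size, self._chunk)]
        self._pos += len(out)
        return out

# Stand-in for the download: a small .tar.gz built in memory.
src = io.BytesIO()
with tarfile.open(fileobj=src, mode="w:gz") as tar:
    for i in range(3):
        data = f"file {i}\n".encode()
        info = tarfile.TarInfo(f"pkg/file{i}.txt")
        info.size = len(data)
        tar.addfile(info, io.BytesIO(data))

# "r|*": stream mode (forward-only reads) with automatic compression detection.
# Members are handled strictly in order as the bytes arrive.
extracted = {}
with tarfile.open(fileobj=PipeLike(src.getvalue()), mode="r|*") as tar:
    for member in tar:
        if member.isfile():
            extracted[member.name] = tar.extractfile(member).read()
            print("got", member.name)
```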

I usually suppress output of either wget (-q) or of tar (no v flag), otherwise I think the output gets mangled and looks funny (you see both download progress and files being extracted).

`.tar.gz`, or `.tgz` if I’m in a hurry… or shipping to MS-DOS

Where’s .7z people?


Can someone explain why macOS always seems to create `__MACOSX` folders in zips that we Linux/Windows users always delete anyway?

Windows adds `desktop.ini` randomly too

Linux adds .demon_portal files all over my computer too.

That’s not Linux doing that. It’s the demons in your hardware trying to escape. They normally don’t cause too many issues luckily, but if you don’t close the portals occasionally they can take over your system.

Yeah, those tend to be per-folder settings for File Explorer.

Like View options, thumbnails and such.

It’s been a while for me, but I think there was something specifically for thumbnails too. You might find one if you go into the folder options, set a folder to be optimized for pictures/videos and add some to it.

You’re thinking of the `thumbs.db` files: https://en.wikipedia.org/wiki/Windows_thumbnail_cache#Thumbs.db

Huh, never noticed that. Probably always thought that was just part of the program/files needed.

this is a completely uneducated guess from a relatively tech-illiterate guy, but could it contain Mac-specific information about weird non-essential stuff like folder backgrounds and item placement in the no-grid view?

Correct. It contains filesystem metadata that’s not supported by the zip file’s “filesystem”.

Interesting. We have that in Linux too just not for every directory.

It’s metadata specific to Macs.

If you download a third-party compression tool, it’ll probably have an option somewhere to exclude these from the zips, but the default tool doesn’t, AFAIK.

Thanks! Hmm, never thought of looking at 7zip’s settings to see if it can autodelete/not unpack that stuff. I’ll see if I can find such a setting!

You can definitely check, but I would expect the option to exist when the archive is created rather than when it’s extracted

“Resource forks” IIRC, old stuff. Same for the .DS_Store file.
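If you’d rather strip the macOS extras on the receiving end, a small sketch with Python’s `zipfile`: it skips the `__MACOSX/` tree (where macOS stores AppleDouble `._*` files holding resource forks and extended attributes), stray `._*` files and `.DS_Store`. The helper names are hypothetical, and the three rules are just the patterns mentioned in this thread, not an exhaustive list.

```python
import io
import tempfile
import zipfile
from pathlib import PurePosixPath

def is_mac_junk(name: str) -> bool:
    """True for macOS-only metadata: __MACOSX/ tree, ._* files, .DS_Store."""
    parts = PurePosixPath(name).parts
    if not parts:
        return False
    return (parts[0] == "__MACOSX"
            or parts[-1] == ".DS_Store"
            or parts[-1].startswith("._"))

def extract_without_mac_junk(source, dest) -> list:
    """Extract a zip (path or file object) into `dest`, skipping macOS metadata."""
    with zipfile.ZipFile(source) as zf:
        keep = [n for n in zf.namelist() if not is_mac_junk(n)]
        zf.extractall(dest, members=keep)
    return keep

# Usage: an in-memory zip shaped like the ones described above.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("photos/a.jpg", b"jpeg bytes")
    zf.writestr("photos/.DS_Store", b"Finder view settings")
    zf.writestr("__MACOSX/photos/._a.jpg", b"AppleDouble metadata")

with tempfile.TemporaryDirectory() as tmp:
    print(extract_without_mac_junk(buf, tmp))  # ['photos/a.jpg']
```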

For just $12.99 you can disable this https://apps.apple.com/us/app/blueharvest/id739483376

Because Apple always gotta fuck with and “innovate” perfectly working shit

Windows’s built-in tool can make zips without fucking with shit AND the resulting zip works just fine across systems.

Mac though…Mac produced zips always ALWAYS give me issues when trying to unzip on a non-mac (ESPECIALLY Linux)

HFS+ has a different feature set than NTFS or ext4, and Apple elected to store metadata that way.

I would imagine modern FS like ZFS or btrfs could benefit from doing something similar but nobody has chosen to implement something like that in that way.

Yeah totally!

frantically searches for the meaning of all those abbreviations

I gotcha:

- Btrfs
  - BTree File System
  - A Copy on Write file system that supports snapshots, supported mostly by
- ZFS
  - Zettabyte File System
  - Copy on Write File System. Less flexible than BTRFS but generally more robust and stable. Better compression in my experience than BTRFS. Out-of-kernel Linux support and native FreeBSD.
- HFS+
  - what Mac uses, I have no clue about this. some Copy on Write stuff.
- NTFS
  - Windows File System
  - From what I know, no compression or COW
  - In my experience less stable than ext4/ZFS but maybe it’s better nowadays.

Great summary, but I have to add that NTFS is WAY more stable than ext4 when it comes to hardware glitches and/or power failures. ZFS is obviously superior to both but overkill for most people; BTRFS should be a nice middle ground, and now even NAS manufacturers like Synology are migrating from ext4 to BTRFS.

Well that’s good to know because I had some terrible luck with it about a decade ago. Although I don’t think I would go back to windows, I just don’t need it for work anymore and it’s become far too complex.

I’ve also had pretty bad luck with BTRFS though, although it seems to have improved a lot in the past 3 years that I’ve been using it.

ZFS would be good, but having to rebuild the kernel module is a pain in the ass, because when it fails to build you’re unbootable (with root on ZFS). I also don’t like how clones are dependent on their parents; that requires a lot of forethought when you’re trying to create a reproducible build on e.g. Gentoo.

Thanks!


MacOS has two files per file, so the extras need to be stored somewhere.

.fitgirlrepack

I use .tar.gz for personal backups because it’s built in, and because it’s the command I could get custom subdirectory exclusion to work with.
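The same kind of subdirectory exclusion in Python’s `tarfile`: `TarFile.add()` takes a `filter` callback, and returning `None` from it drops that member. A sketch with a made-up `EXCLUDE_DIRS` set and `backup` helper; on the command line, GNU tar’s `--exclude=PATTERN` option covers the same ground.

```python
import tarfile
from pathlib import Path, PurePosixPath

# Hypothetical exclusion list: any path component with one of these names is
# skipped wherever it appears in the tree, together with everything below it.
EXCLUDE_DIRS = {".cache", "node_modules", "__pycache__"}

def skip_excluded(info: tarfile.TarInfo):
    """tarfile `filter` hook: returning None leaves the member out."""
    if any(part in EXCLUDE_DIRS for part in PurePosixPath(info.name).parts):
        return None
    return info

def backup(src: str, archive: str) -> None:
    """Write `src` into a gzip-compressed tarball, minus excluded subdirectories."""
    with tarfile.open(archive, "w:gz") as tar:
        # When the filter rejects a directory, tarfile.add() does not recurse
        # into it, so its contents are never even visited.
        tar.add(src, arcname=Path(src).name, filter=skip_excluded)
```

Usage would be something like `backup("/home/me/projects", "projects.tar.gz")`, with both paths being placeholders.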

I’m the weird one in the room. I’ve been using 7z for the last 10-15 years and now `.tar.zst`, after finding out that Zstandard achieves higher compression than 7-Zip, even with 7-Zip in “best” mode, LZMA version 1, huge dictionary sizes and whatnot.

`zstd --ultra -M99000 -22 files.tar -o files.tar.zst`

You can actually use Zstandard as your codec for 7z to get the benefits of better compression and a modern archive format! Downside is it’s not a default codec, so when someone else tries to open it they may be confused by it not working.

That is an interesting implementation of security through obscurity…

How does one enable this on the standard 7Zip client?

On Windows, it’s easy! Unfortunately, on Linux, as far as I know, you currently have to use a non-standard client.

Cool, THANKS!! 😊 🙏

.tar.7z gang (probably not a good idea)

.tar.xz is. Or .tar.lzip.

.tar.7z.zip.bz2 gang unite

Mf’ers act like they forgot about zstandard