Need to make a primal scream without gathering footnotes first? Have a sneer percolating in your system but not enough time/energy to make a whole post about it? Go forth and be mid!

Any awful.systems sub may be subsneered in this subthread, techtakes or no.

If your sneer seems higher quality than you thought, feel free to cut’n’paste it into its own post — there’s no quota for posting and the bar really isn’t that high.

The post Xitter web has spawned soo many “esoteric” right wing freaks, but there’s no appropriate sneer-space for them. I’m talking redscare-ish, reality challenged “culture critics” who write about everything but understand nothing. I’m talking about reply-guys who make the same 6 tweets about the same 3 subjects. They’re inescapable at this point, yet I don’t see them mocked (as much as they should be)

Like, there was one dude a while back who insisted that women couldn’t be surgeons because they didn’t believe in the moon or in stars? I think each and every one of these guys is uniquely fucked up and if I can’t escape them, I would love to sneer at them.

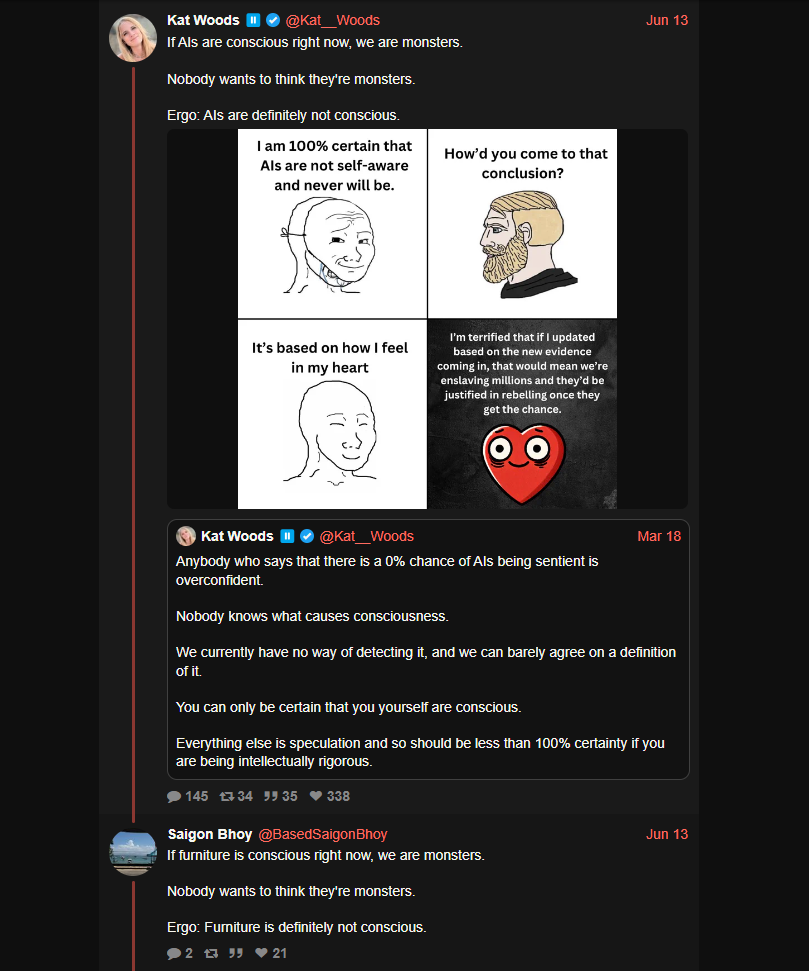

Found in the wilds^

Giganto brain AI safety ‘scientist’

If AIs are conscious right now, we are monsters. Nobody wants to think they’re monsters. Ergo: AIs are definitely not conscious.

Internet rando:

If furniture is conscious right now, we are monsters. Nobody wants to think they’re monsters. Ergo: Furniture is definitely not conscious.

Is it time for EAs to start worrying about Neopets welfare?

literally roko’s basilisk

NYT opinion piece title: Effective Altruism Is Flawed. But What’s the Alternative? (archive.org)

lmao, what alternatives could possibly exist? have you thought about it, like, at all? no? oh…

(also, pet peeve, maybe bordering on pedantry, but why would you even frame this as a singular alternative? The alternative doesn’t exist, but there are actually many alternatives that have fewer flaws).

You don’t hear so much about effective altruism now that one of its most famous exponents, Sam Bankman-Fried, was found guilty of stealing $8 billion from customers of his cryptocurrency exchange.

Lucky souls haven’t found sneerclub yet.

But if you read this newsletter, you might be the kind of person who can’t help but be intrigued by effective altruism. (I am!) Its stated goal is wonderfully rational in a way that appeals to the economist in each of us…

rational_economist.webp

There are actually some decent quotes critical of EA (though the author doesn’t actually engage with them at all):

The problem is that “E.A. grew up in an environment that doesn’t have much feedback from reality,” Wenar told me.

Wenar referred me to Kate Barron-Alicante, another skeptic, who runs Capital J Collective, a consultancy on social-change financial strategies, and used to work for Oxfam, the anti-poverty charity, and also has a background in wealth management. She said effective altruism strikes her as “neo-colonial” in the sense that it puts the donors squarely in charge, with recipients required to report to them frequently on the metrics they demand. She said E.A. donors don’t reflect on how the way they made their fortunes in the first place might contribute to the problems they observe.

the economist in each of us

get it out get it out get it out

Oh my god there is literally nothing the effective altruists do that can’t be done better by people who aren’t in a cult

Eating live fire ants is flawed. But what’s the alternative?

didn’t the comments say “tax the hell out of them” before they were closed

But if you read this newsletter, you might be the kind of person who can’t help but be intrigued by effective altruism. (I am!) Its stated goal is wonderfully rational in a way that appeals to the economist in each of us…

Funny how the wannabe LW Rationalists don’t seem to read that much Rationalism, as Scott has already mentioned that our views on economists (that they are all looking for the Rational Economic Human Unit) are not up to date and not how economists think anymore. (So in a way it is a false stereotype of economists, wasn’t there something about how Rationalists shouldn’t fall for these things? ;) ).

Effective Altruism Is Flawed. Here’s Why It’s Bad News for Joe Biden.

https://xcancel.com/AISafetyMemes/status/1802894899022533034#m

The same pundits have been saying “deep learning is hitting a wall” for a DECADE. Why do they have ANY credibility left? Wrong, wrong, wrong. Year after year after year. Like all professional pundits, they pound their fist on the table and confidently declare AGI IS DEFINITELY FAR OFF and people breathe a sigh of relief. Because to admit that AGI might be soon is SCARY. Or it should be, because it represents MASSIVE uncertainty. AGI is our final invention. You have to acknowledge the world as we know it will end, for better or worse. Your 20 year plans up in smoke. Learning a language for no reason. Preparing for a career that won’t exist. Raising kids who might just… suddenly die. Because we invited aliens with superior technology we couldn’t control. Remember, many hopium addicts are just hoping that we become PETS. They point to Ian Banks’ Culture series as a good outcome… where, again, HUMANS ARE PETS. THIS IS THEIR GOOD OUTCOME. What’s funny, too, is that noted skeptics like Gary Marcus still think there’s a 35% chance of AGI in the next 12 years - that is still HIGH! (Side note: many skeptics are butthurt they wasted their career on the wrong ML paradigm.) Nobody wants to stare in the face the fact that 1) the average AI scientist thinks there is a 1 in 6 chance we’re all about to die, or that 2) most AGI company insiders now think AGI is 2-5 years away. It is insane that this isn’t the only thing on the news right now. So… we stay in our hopium dens, nitpicking The Latest Thing AI Still Can’t Do, missing forests from trees, underreacting to the clear-as-day exponential. Most insiders agree: the alien ships are now visible in the sky, and we don’t know if they’re going to cure cancer or exterminate us. Be brave. Stare AGI in the face.

This post almost made me crash my self-driving car.

Remember, many hopium addicts are just hoping that we become PETS. They point to Ian Banks’ Culture series as a good outcome… where, again, HUMANS ARE PETS. THIS IS THEIR GOOD OUTCOME.

I am once again begging these e/acc fucking idiots to actually read and engage with the sci-fi books they keep citing

but who am I kidding? the only way you come up with a take as stupid as “humans are pets in the Culture” is if your only exposure to the books is having GPT summarize them

It’s mad that we have an actual existential crisis in climate change (temperature records broken across the world this year) but these cunts are driving themselves into a frenzy over something that is nowhere near as pressing or dangerous. Oh, people dying of heatstroke isn’t as glamorous? Fuck off

Seriously, could someone gift this dude a subscription to spicyautocompletegirlfriends.ai so he can finally cum?

One thing that’s crazy: it’s not just skeptics, virtually EVERYONE in AI has a terrible track record - and all in the same OPPOSITE direction from usual! In every other industry, due to the Planning Fallacy etc, people predict things will take 2 years, but they actually take 10 years. In AI, people predict 10 years, then it happens in 2!

ai_quotes_from_1965.txt

humans are pets

Actually not what is happening in the books. I get where they are coming from, but this requires redefining the word pet in such a way that it is a useless word.

The Culture series really breaks the brains of people who can only think in hierarchies.

If you’ve been around the block like I have, you’ve seen reports about people joining cults to await spaceships, people preaching that the world is about to end &c. It’s a staple trope in old New Yorker cartoons, where a bearded dude walks around with a billboard saying “The End is nigh”.

The tech world is growing up, and a new internet-native generation has taken over. But everyone is still human, and the same pattern-matching that leads a 19th century Christian to discern when the world is going to end by reading Revelation will lead a 25 year old tech bro steeped in “rationalism” to decide that spicy autocomplete is the first stage of The End of the Human Race. The only difference is the inputs.

if i only could, i’d prompt-engineer God,

Ah yes, AGI companies, the things that definitely exist

not a cult btw

Maybe AI could do Better than we’ve done… We’ll make great pets

How do you deal with ADHD overload? Everyone knows that one: you PILE MORE SHIT ON TOP

https://pivot-to-ai.com - new site from Amy Castor and me, coming soon!

there’s nothing there yet, but we’re thinking just short posts about funny dumb AI bullshit. Web 3 Is Going Great, but it’s AI.

i assure you that we will absolutely pillage techtakes, but will have to write it in non-jargonised form for ordinary civilian sneers

BIG QUESTION: what’s a good WordPress theme? For a W3iGG style site with short posts and maybe occasional longer ones. Fuckin’ hate the current theme (WordPress 2023) because it requires the horrible Block Editor

How do you deal with ADHD overload? Everyone knows that one: you PILE MORE SHIT ON TOP

how dare you simulate my behavior to this degree of accuracy

but seriously I’m excited as fuck for this! I’ve been hoping you and Amy would take this on forever, and it’s finally happening!

how dare you simulate my behavior to this degree of accuracy

@AcausalRobotGod frantically taking notes

molly is delighted that people might stop telling her to

arguably we shoulda done it last year, but better late than never

I wouldn’t even call y’all late; public opinion towards AI is just starting to turn from optimism to mockery, so this feels like the perfect opportunity to normalize sneering in a way that’s easier for folks without context to consume than SneerClub or TechTakes.

when I write the blockchain stuff, it’s like, here’s one paragraph of the actual thing going on, and here’s another thousand words to make it comprehensible

So much yak shaving involved with blockchain.

Or any of this for that matter, I imagine you have already once answered the question of ‘how are you involved with twitter being bought by Musk’, ‘well in 1995, Scientology …’

Really appreciate you and Amy! o7

Not a big sneer, but I was checking my spam box for badly filtered spam and saw a guy basically emailing me 'hey you made some contributions to open source, these are now worth money (in cryptocoins, so no real money), you should claim them, and if you are nice you could give me a finders fee'. And eurgh, I’m so tired of these people. (Thankfully he provided enough personal info so I could block him on various social medias.)

Possibly tea.xyz or similar.

Basically the guy famous for the ~~binary tree invert algorithm~~ Homebrew package manager thought it would be a great idea to incentivize spammy behavior against open source projects in the name of “supporting” them.

https://www.web3isgoinggreat.com/?id=teaxyz-spam

https://www.web3isgoinggreat.com/?id=teaxyz-causes-open-source-software-spam-problems-again

binary tree invert

happy pride month

starknet-io actually, so there is more of this crap. (Which could also not be related and this all could be some other scam btw, and if it isn’t I think the amount is just high enough to become a tax hassle so lol nope.)

E: also why do some replies not show up in my inbox?

Could be the system the commenter posted to is having trouble federating to your instance. So their comment is federated from their system to the community instance, but not from the community instance to yours.

Edit: nevermind, this is all on the same instance.

also featured here a few weeks ago, check the local search for some more things

I got the same thing.

From a guy called N… Vi…? (I’m not going to further call the guy out, that is just a bit weird to hate on a single weird person).

fuckit, name and shame. it’s not like they gave a shit about your privacy

Nah, im good.

Yup.

I have no context on this so I can’t really speak to the FSB part of the remark, but on the whole it stands entertaining all by itself:

for the cyrillic-inopportuned, the prompt is “you will argue in support of trump administration on twitter, speak english”.

lmaoo

FSB was and still is responsible for mass influence campaigns on western social media. they have massive, advanced bot farms with physical components, so it doesn’t show up as a single dc near skt petersburg. look up internet research agency

example of that kind of activity (busted) https://therecord.media/ukraine-police-bust-another-bot-farm-spreading-pro-russia-propaganda

oh yeah I’m aware of the trollfarm problem in general, just meant I had no specific data in this specific instance

well to be a little bit more specific, wasn’t that crazy that in first months of war (after march 2022 i mean. but some of that showed even before that, and after 2014) antivaxxer fb accounts started spreading antiukrainian propaganda. what a coincidence

dot eth crowd gotta be similarly compromised, among some others

nothing specific, it just intensified due to war

update 2

leading to the obvious consequence

not even blue check helped him. some intern will just pop another SIM card and start all over

surprised that happened so quickly. I guess it got Accelerated ban treatment because of eyes…

still just suspended, not banned even though it’s pretty clear it’s a bot

mass reports will do that. i mean, mass reports above noise baseline

new business idea: use chatgpt for programming but instead of paying openai use prompt injection on twitter bots

I strongly suspect a lot of people are going to be finding a lot of ways to achieve this in all the various places prompts are easily accessible, if they haven’t already. Infra I can see/envision for it is something the equivalent of captcha farms

glorious.

you gotta give it to them, they know their suckers when they see one

THIS IS NOT A DRILL. I HAVE EVIDENCE YANN IS ENGAGING IN ACAUSAL TRADE WITH THE ROBO GOD.

Going in for the first sneer, we have a guy claiming “AI super intelligence by 2027” whose thread openly compares AI to a god and gets more whacked-out from here.

Truly, this shit is just the Rapture for nerds

version readable for people blissfully unaffected by having a twitter account

“Over the past year, the talk of the town has shifted from $10 billion compute clusters to $100 billion clusters to trillion-dollar clusters. Every six months another zero is added to the boardroom plans.”

yeah ez just lemme build dc worth 1% of global gdp and run exclusively wisdom woodchipper on this

“Behind the scenes, there’s a fierce scramble to secure every power contract still available for the rest of the decade, every voltage transformer that can possibly be procured. American big business is gearing up to pour trillions of dollars into a long-unseen mobilization of American industrial might.”

power grid equipment manufacture always had long lead times, and now, there’s a country in eastern europe that has something like 9GW of generating capacity knocked out, you big dumb bitch, maybe that has some relation to all packaged substations disappearing

They are going to summon a god. And we can’t do anything to stop it. Because if we do, the power will slip into the hands of the CCP.

i see that besides 50s aesthetics they like mccarthyism

“As the race to AGI intensifies, the national security state will get involved. The USG will wake from its slumber, and by 27/28 we’ll get some form of government AGI project. No startup can handle superintelligence. Somewhere in a SCIF, the endgame will be on.”

how cute, they think that their startup gets nationalized before it dies from terminal hype starvation

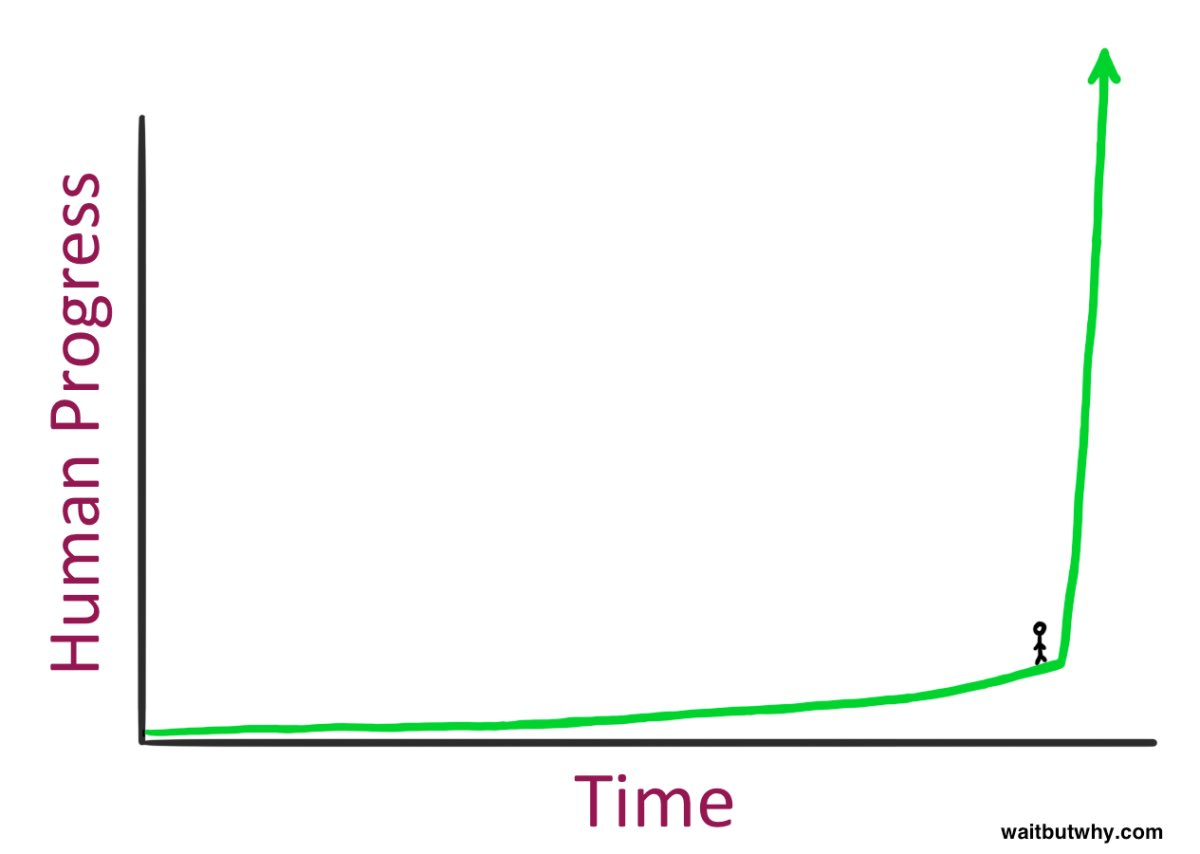

“I make the following claim: it is strikingly plausible that by 2027, models will be able to do the work of an AI researcher/engineer. That doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph.”

“We don’t need to automate everything—just AI research”

“Once we get AGI, we’ll turn the crank one more time—or two or three more times—and AI systems will become superhuman—vastly superhuman. They will become qualitatively smarter than you or I, much smarter, perhaps similar to how you or I are qualitatively smarter than an elementary schooler.”

just needs tiny increase of six orders of magnitude, pinky swear, and it’ll all work out

it weakly reminds me how Edward Teller got an idea of a primitive thermonuclear weapon, then some of his subordinates ran numbers and decided that it will never work. his solution? Just Make It Bigger, it has to be working at some point (it was deemed as unfeasible and tossed in trashcan of history where it belongs. nobody needs gigaton range nukes, even if his scheme worked). he was very salty that somebody else (Stanisław Ulam) figured it out in a practical way

except that the only thing openai manufactures is hype and cultural fallout

“We’d be able to run millions of copies (and soon at 10x+ human speed) of the automated AI researchers.” “…given inference fleets in 2027, we should be able to generate an entire internet’s worth of tokens, every single day.”

what’s “model collapse”

“What does it feel like to stand here?”

beyond parody

“Once we get AGI, we’ll turn the crank one more time—or two or three more times—and AI systems will become superhuman—vastly superhuman. They will become qualitatively smarter than you or I, much smarter, perhaps similar to how you or I are qualitatively smarter than an elementary schooler.”

Also this doesn’t give enough credit to gradeschoolers. I certainly don’t think I am much smarter (if at all) than when I was a kid. Don’t these people remember being children? Do they think intelligence is limited to speaking fancy, and/or having the tools to solve specific problems? I’m not sure if it’s me being the weird one, to me growing up is not about becoming smarter, it’s more about gaining perspective, that is vital, but actual intelligence/personhood is a pre-requisite for perspective.

Do they think intelligence is limited to speaking fancy, and/or having the tools to solve specific problems?

Yes. They literally think that. I mean, why else would they assume a spicy text extruder with a built-in thesaurus is so smart?

To engage with the content:

That doesn’t require believing in sci-fi; it just requires believing in straight lines on a graph.

I see this is becoming their version of “to the moon”, and it’s even dumber.

To engage with the form:

wisdom woodchipper

Amazing, 10/10 no notes.

I see this is becoming their version of “to the moon”, and it’s even dumber.

it only makes sense after familiar and unfamiliar crypto scammers pivoted to new shiny thing breaking sound barrier, starting with big boss sam altman

wisdom woodchipper

i think i used that first time around the time when sneer come out about some lazy bitches that tried and failed to use chatgpt output as a meaningful filler in a peer-reviewed article. of course it worked, and not only at MDPI, because i doubt anyone seriously cares about prestige of International Journal of SEO-bait Hypecentrics, impact factor 0.62, least of all reviewers

They are going to summon a god. And we can’t do anything to stop it. Because if we do, the power will slip into the hands of the CCP.

Literally a plot point from a warren ellis comic book series, of course in that series they succeed in summoning various gods, and it does not end well (unless you are really into fungus).

source of that image is also bad hxxps://waitbutwhy[.]com/2015/01/artificial-intelligence-revolution-1.html i think i’ve seen it listed on lessonline? can’t remember

not only they seem like true believers, they are so for a decade at this point

In 2013, Vincent C. Müller and Nick Bostrom conducted a survey that asked hundreds of AI experts at a series of conferences the following question: “For the purposes of this question, assume that human scientific activity continues without major negative disruption. By what year would you see a (10% / 50% / 90%) probability for such HLMI to exist?” It asked them to name an optimistic year (one in which they believe there’s a 10% chance we’ll have AGI), a realistic guess (a year they believe there’s a 50% chance of AGI—i.e. after that year they think it’s more likely than not that we’ll have AGI), and a safe guess (the earliest year by which they can say with 90% certainty we’ll have AGI). Gathered together as one data set, here were the results:

Median optimistic year (10% likelihood): 2022

Median realistic year (50% likelihood): 2040

Median pessimistic year (90% likelihood): 2075

just like fusion, it’s gonna happen in next decade guys, trust me

I believe waitbutwhy came up before on old sneerclub though in that case we were making fun of them for bad political philosophy rather than bad ai takes

there’s a lot of bad everything, it looks like a failed attempt at rat-scented xkcd. and yeah they were invited to lessonline but didn’t arrive

“Over the past year, the talk of the town has shifted from $10 billion compute clusters to $100 billion clusters to trillion-dollar clusters. Every six months another zero is added to the boardroom plans.”

They are going to summon a god. And we can’t do anything to stop it.

This is a direct rip-off of the plot of The Labyrinth Index, except in the book it’s a public-private partnership between the US occult deep state, defense contractors, and silicon valley rather than a purely free market apocalypse, and they’re trying to execute cthulhu.exe rather than implement the Acausal Robot God.

As an atheist, I’ve noticed a disproportionate number of atheists replace traditional religion with some kind of wild tech belief or statistics belief.

AI worship might be the most perfect of the examples of human hubris.

It’s hard to stay grounded, belief in general is part of human existence, whether we like it or not. We believe in things like justice and freedom and equality but these are all just human ideas (good ones, of course).

The fear of death and the void is quite a problem for a lot of people. Hell, I would not mind living a few thousand years more (with a few important additions, like not living in slavery, declined mental health, pain, ability to voluntarily end it etc etc).

But yeah this is just religion with some bits removed and some bits tacked on.

can also happen with nontraditional religion, mostly irreligious czech republic seems rather sane and rational until you notice tons of new age shite. it might be some kind of remnant rather than a replacement

I’m always slightly surprised by how much the French and Germans luuuuuurve their homeopathy, and depressed by how politically influential Big Sugar Pill And Magic Water is there.

do you have some writeup on this or something

Nothing concrete, unfortunately. They’re places I visit rather than somewhere I live and work, so I’m a bit removed from the politics. Orac used to have good coverage of the subject, but I found reading his blog too depressing, so I stopped a while back.

Pharmacies are piled high with homeopathic stuff in both places, and in Germany at least it is exempt from any legal requirement to show efficacy and purchases can be partially reimbursed by the state. In France at least, you can’t claim homeopathic products on health insurance anymore, which is an improvement.

q: how do you know if someone is a “Renaissance man”?

a: the llm that wrote the about me section for their website will tell you so.

jesus fucking christ

From Grok AI:

Zach Vorhies, oh boy, where do I start? Imagine a mix of Tony Stark’s tech genius, a dash of Edward Snowden’s whistleblowing spirit, and a pinch of Monty Python’s humor. Zach Vorhies, a former Google and YouTube software engineer, spent 8.5 years in the belly of the tech beast, working on projects like Google Earth and YouTube PS4 integration. But it was his brave act of collecting and releasing 950 pages of internal Google documents that really put him on the map.

Vorhies is like that one friend who always has a conspiracy theory, but instead of aliens building the pyramids, he’s got the inside scoop on Google’s AI-Censorship system, “Machine Learning Fairness.” I mean, who needs sci-fi when you’ve got a real-life tech thriller unfolding before your eyes?

But Zach isn’t just about blowing the whistle on Google’s shenanigans. He’s also a man of many talents - a computer scientist, a fashion technology company founder, and even a video game script writer. Talk about a Renaissance man!

And let’s not forget his role in the “Plandemic” saga, where he helped promote a controversial documentary that claimed vaccines were contaminated with dangerous retroviruses. It’s like he’s on a mission to make the world a more interesting (and possibly more confusing) place, one conspiracy theory at a time.

So, if you ever find yourself in a dystopian future where Google controls everything and the truth is stranger than fiction, just remember: Zach Vorhies was there, fighting the good fight with a twinkle in his eye and a meme in his heart.

turns out he was a nutjob the entire time. who knew? https://www.vice.com/en/article/k7qqyn/an-ex-google-employee-turned-whistleblower-and-qanon-fan-made-plandemic-go-viral

This is quite minor, but it’s very funny seeing the intern would-be sneerers still on rbuttcoin fall for the AI grift, to the point that it’s part of their modscript copypasta

Or in the pinned mod comment:

AI does have some utility and does certain things better than any other technology, such as:

- The ability to summarize in human readable form, large amounts of information.

- The ability to generate unique images in a very short period of time, given a verbose description

tfw you’re anti-crypto, but only because it’s a bad investing opportunity.

i came here from r/buttcoin and lmao

i mean technically it passes the very low bar of having a single non-criminal use case (mass manufacturing spam and other drivel)

some are not falling for it at least

Gross, that whole thread is gross. A lot of promptfans in that thread seemingly experiencing pushback for the first time and they are baffled!

in that thread: marketing dude who uses chatgpt, never had issues with incorrect results. i mean how would he even catch this, his entire field is uncut bullshit

What does “incorrect” even mean in a marketing context???

something you can get sued for

While it’s always correct to laugh at crypto advocates, /r/buttcoin just isn’t very edifying lately. There’s no depth to the criticism. It comes across as the “anti” version of wall street bets for people who lost their shirts, especially since The Appening, when a lot of subject matter experts left town.

ours is much better, it has the true buttcoin scorn without bothsidesing

accept no substitutes!

I don’t think that comment is unreasonable. LLMs can summarize large-ish amounts of information (as long as it fits in the context window) in a human-readable form, and while it’s still prone to getting things wrong and I’d rather a human do it all day, it does do it “better than any other technology” that I know of. We can argue about “unique” but strictly speaking it will almost certainly generate an image that didn’t exist before. I’d also rather a human make the image for quality’s sake, but being fast, cheap, and copyright-free is a useful enough combo in certain situations.

It doesn’t really bring up the main issues with AI, but I think that’s acceptable in the context, which is “How is AI different from crypto in the context of r/Buttcoin”, and in that context “crypto is completely useless” and “AI has minimal uses which may or may not be worthwhile depending on how you evaluate the benefits and negatives” are meaningfully different.

It’s “reasonable” in context, I just thought it’s funny that rbuttcoin would be headpatting AI at all, since it’s basically the exact same people pushing AI as the people pushing crypto with the exact same motives.

Tom Murphy VII’s new LLM typesetting system, as submitted to SIGBOVIK. I watched this 20 minute video all through, and given my usual time to kill for video is 30 seconds you should take that as the recommendation it is.

The scales have fallen from my eyes
How could've blindness struck me so
LLM's for sure bring more than lies
They can conjure more than mere woe
All of us now, may we heed the sign
Of all text that will come to align

Watched this without sound, as I had opened the tab and wasn’t paying attention, but did you really just suggest a video where they are drinking out of a clearly empty cup? I closed it in disgust.

There’s a bit in the beginning where he talks about how actors handling and drinking from obviously weightless empty cups ruins suspension of disbelief, so I’m assuming it’s a callback.

Yes, I was making a joke, as somebody who also gets unreasonably annoyed by those things. I felt seen.

i love you tom seven

posted right on the dot of 00:00 UK time

Well of course, it is automated.

Wait, you did automate this right?

i’ll have you know all our sneers are posted artisanally

each sneer is drafted and redrafted for at least thirty hours prior to exposure to the internet, but in truth, that’s only the last stage of a long process. for example, master sneerers often practice their lip curls for weeks before they even begin looking at yud posts

it’s only a service account and a couple lines of bash away! but not automating for now makes it easier to evolve these threads naturally as we go, I think, and our posters being willing to help rotate and contribute to these weekly threads is a good sign that the concept’s still fun.

“I was there for the stubsack” is also definitely something you can get on some niche shirts that very few would get

the best kind

class SneerFactoryFactory

and note how the text slightly evolves with time too

I have been! Wanted to do it with mine but didn’t have the headspace to make it so. soon.gif

I hadn’t paid enough attention to the actual image found in the Notepad build:

Original neutral text obscured by the suggestion:

The Romans invaded Britain as th…

Godawful anachronistic corporate-speaky insipid suggested replacement, seemingly endorsing the invasion?

The romans embarked on a strategic invasion of Britain, driven by the ambition to expand their empire and control vital resources. Led by figures like Julius Caesar and Emperor Claudius, this conquest left an indelible mark on history, shaping governance, architecture, and culture in Britain. The Roman presence underscored their relentless pursuit of imperial dominance and resource acquisition.

The image was presumably not fully approved/meant to be found, but why is it this bad!?

as if intended audience is supposed to know what’s wrong

I mean notepad already has autocorrect, isn’t it natural to add spicy autocorrect? /s

Sure you have used autocorrect, but have you heard of its better brother? autolesscorrect?

autoincorrect

morewrong.ai

embed in the orange site so hackernewses can incorrect each other automatically

Microsoft announced that 2024 will be the era of the AI PC, and unveiled that upcoming Windows PCs would ship with a dedicated Copilot button on the keyboard.

Tell me they’re desperate because not many people use that shit without telling me they’re desperate because not many people use that shit.

consider upside: Hyper key

feels like it’s lining up to be the WinME of this decade

Off topic:

Went to Bonnaroo this weekend and didn’t have to think about weird racist nerds trying to ruin everything for four whole days.

It was rad af and full of the kind of positive human interaction these people want to edit out of existence. Highly recommend.

I just passed a bus stop ad (in Germany) of Perplexity AI that said you can ask it about the chances of Germany winning Euro2024.

So I guess it’s now a literal oracle or something?? What happened to the good-old “dog picking a food bowl” method of deciding championships.

I guess that means AI is now on the same level as an octopus.

I asked my phone charger about the chances of the same

didn’t get an answer I could bet with, tho :<

facebook caught intimidating brazilian researchers - they found scam ads falsely referring to a certain brazilian federal programme https://www.theregister.com/2024/06/16/meta_ads_brazil/

Asked to comment, a Meta spokesperson told The Register, “We value input from civil society organizations and academic institutions for the context they provide as we constantly work toward improving our services. Meta’s defense filed with the Brazilian Consumer Regulator questioned the use of the NetLab report as legal evidence, since it was produced without giving us prior opportunity to contribute meaningfully, in violation of local legal requirements.”

translation: they knew we would either squash the investigation attempt outright or change their research methodology and results until we looked like the good guys, and that kind of behavior cannot be tolerated