- cross-posted to:

- memes@lemmy.ml

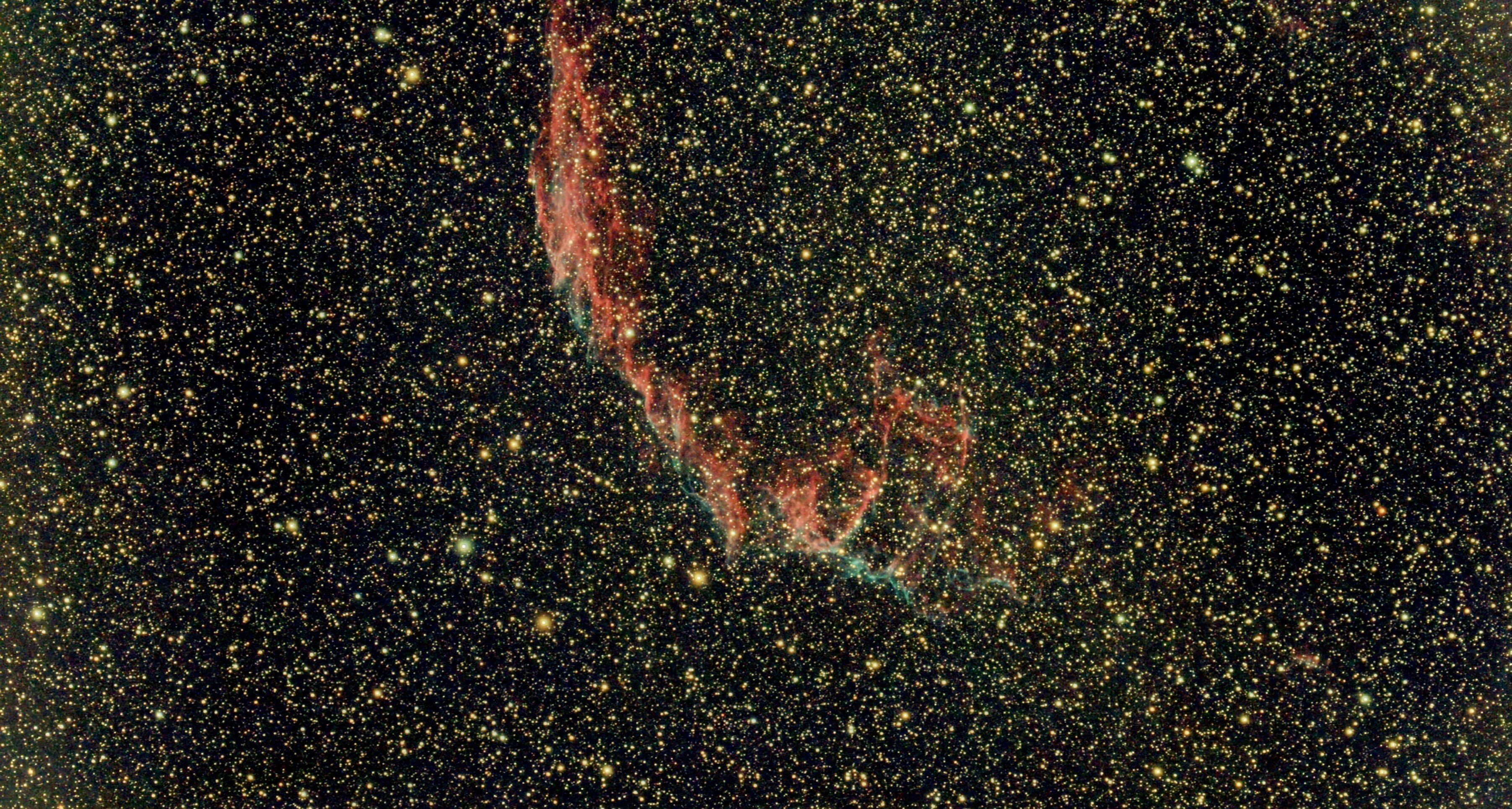

The 2024 animated movie Flow was done entirely in Blender. It is an incredible movie, highly recommend.

Blender was also used a bit in Everything Everywhere All At Once

And Into the Spiderverse for some stuff

deleted by creator

It’s a start! Makes the switch much easier.

No. You either go full Stallman and inject Gentoo directly into your aorta, or you might as well be deep throating Satya Nutella while bouncing on Tim Apple’s lap. Filthy casual.

Noob, I snort pure Colombian and run LFS directly in my brain using the power of cryptic dreams and messages handed down to me directly by Lord RMS

So you’re saying your prefrontal cortex doesn’t even have a Holy C✝ compiler? Terry looks down on you in shame.

Everyone fucking knows that God loves elephants. Except this guy

The only holy compiler I need is lord RMSs GCC

Noob, i flick electrons

Agreed, OSS purity is silly. I am running an open source client (Thunder) to this open source service on my Pixel 9 running GrapheneOS, and the low-level firmware is still absolutely proprietary.

Actually, to be clear, I don’t think FOSS purity is bad. I just mean that denigrating what others are doing because they’re using something non-free while they’re making steps in the right direction is dumb and counterproductive.

To my mind, FOSS is the only way forward for a healthy, functioning society, and the fact that so much of our digital landscape is being gradually replaced with it is to me evidence of that. I think the end goal should always be pure FOSS, but that doesn’t (necessarily) mean immediately jumping to all FOSS; it just means taking steps to cut out proprietary software wherever you reasonably can.

Running Linux on closed source hardware. Classic.

I bet you aren’t even using your own open RISC-V based SBC, with fully open-source peripherals. Is your computer monitor even running an open-source firmware or are you just a FOSS poser?

Using computers with closed source biology. Classic.

I bet you haven’t even engineered your own DNA-II, fully-sequenced, libre-licensed microbiome with open source biochemical pathways. Are your eyes even running an open-source neural firmware, or are you just a FOSS poser?

Using biology on closed source chemistry. Classic. I bet you didn’t even roll your own proton mass or bother configuring your own valence shells. Are you even running your own coulomb law policies, or are you just a FOSS poser?

I don’t have a witty reply, but these kinds of threads were the best part of reddit. So glad to have shitposters like you all here on Lemmy.

Using computers with closed source biology. Classic.

Hey now, biology is pretty definitively open source. Every generation produces small patches of varying quality (mutations) and for most organisms the source is freely distributed to create new builds (reproduction). I mean if no one is downloading your genetic repo that’s largely a you problem (natural selection) not a biology problem.

The documentation fucking sucks tho,

Yeah, but the interpreter is pretty universal, if sometimes buggy.

Hot take: I hate when software just extracts an executable.

Fucking install it so that it’s registered with the software updater and uninstaller. Don’t make me remember that I have to go hunting in the folder to delete this one app.

Some people prefer it.

I maintain a small piece of Windows software and originally just provided an installer, but I received enough requests for it that now when I publish releases I provide both an installer and a zipped portable build.

This is the way!

Having no package manager be like:

Mac Applications

- You don’t need an uninstaller if deleting the folder suffices

- You don’t want some software to update.

I know I don’t NEED an uninstaller. I want to use the uninstaller I already have for all my other apps.

Let me have a consistent user experience.

Automatically figure out the right spot for the app resources and set the appropriate file permissions.

Show up in the list of installed applications, so I can sort them by size, if I’m running out of space.

Don’t make me jump through hoops or know the magical directory I need to put it in, in order to have it show up in the start menu or app drawer.

Assuming you are on Windows, the proper install method is to run

winget install -e --id BlenderFoundation.Blender

Cool, that doesn’t help because I don’t actually want blender.

I’m commenting on how much I hate when software is provided as just a portable executable.

I know that a lot of the time they’re also provided as flatpaks or debs or in snap or windows app store, or Apple app store, etc.

But I’m talking about doing the thing that is being described in the image: unpacking a portable executable.

Kind of a moot point since most windows programs don’t have a centralized hub for updates either, even when “properly installed” in program files.

Not really moot, no.

A portable executable can have neither of those things. It also won’t show up in the start menu app list.

With an installer, it’ll at least show up in the uninstaller, with an install size that I can see when I’m looking to uninstall things, and it’ll at least show up in the app list.

But they could also package it through the app store where you get all that plus centralized update management.

But I’d be happy with just having it show up in the app list and uninstaller.

It absolutely can, I have several portable apps with self updating ability built in, when I use them it prompts me if I want to update or not, I personally appreciate that in certain cases.

I do dislike when they throw config files all over the place, so cleanup becomes very messy if I need to remove something.

Again tho, natively on windows there isn’t a great way to do that anyway, the windows store sucks and not many will use the package managers via cmd either.

I’m gonna be a bit rude here because you’re not reading what I wrote.

I did not say that such apps cannot be updated, I said that they’re not updated through a central update manager. So I don’t give a flying fuck if you implemented your own custom app updater in your app because that’s clearly and explicitly not what I’m talking about.

I also don’t give a fuck about throwing config files all over the place, since a) the uninstall script takes care of that and b) this doesn’t have to be specific to windows. Having an installer doesn’t mean that configs must be thrown everywhere. Afaict `apt-get` isn’t throwing files everywhere.

Again I don’t really give a fuck about windows, but saying that it’s not possible because people don’t use the tool that makes it possible is fucking inane bullshit. Idk why you think the windows store “sucks” and I don’t really give a fuck, it works fine as a user, even if I don’t personally use it.

Lmao alright bud, there’s really no reason to get so worked up about this.

I did read what you wrote, just gave alternate aspects of the conversation from my viewpoint, that’s generally how discussions work.

I find it a bit funny you say the windows store is fine yet haven’t/don’t use it, not sure how you can talk about its functionality from a point of ignorance so strongly.

I understand your viewpoint and even agreed, simply also stating I appreciate portable applications, some things they can implement that mitigate the need for a centralized update location, and some of the downsides I’ve come across while using them (that do play into your point, that a centralized way of managing them is cleaner if implemented properly).

AppImage is absolute chaos. Like, there’s an entire application floating on my desktop and it doesn’t have an icon, doesn’t appear in my list of apps, doesn’t update and has its own copy of libraries that are on my system, but aren’t managed or updated.

It’s even better when I can’t find a program that I thought I had installed. I go on the internet, find the site, and realise it’s appimage. I download the file just to find I already had it, and it was in my downloads directory.

Just package your program properly FFS.

If it’s good software for a larger program it will execute an install program that does register it. Other stuff should go in a specific folder so you can review what’s there.

Oh it’s free so it lacks features

Exactly! And every proprietary software is by definition perfect cause it is subject to the forces of the open market. Subway eat fresh and freeze, scumbag!

Can’t wait to live in Snow Crash

Oh fuck it’s Home Depot presents: The Police™

every move you make

If you don’t count professional software, nowadays it’s actually the opposite. Very often in proprietary software there are features removed with no alternative provided by developers, or there’s one but actually it has nothing to do with what you actually want.

And sometimes the one feature you need requires the Enterprise version with a $4799 yearly subscription.

deleted by creator

Sometimes they actually have too many

I just got blender after having last looked at it ten years ago. It looks so much better! I had an easy time finding stuff. If you tried it in the past and are afraid of how ugly it was it is worth another shot. Also look up the doughnut tutorial.

I’d like to make it like that for my projects, but I don’t use windows so I can’t do well with packaging them. And sometimes when I try it runs in the computer, but then doesn’t run in other computers because of missing dlls or some other things.

Anyone have good idea how to make it easy. Using windows VM is such a hassle to install and such just for tiny programs I make.

Make them in a portable language. Something like Java for example. Or you can write in rust and compile for each target.

It’s in rust. Problem is the gtk part, it has to be installed in the system, which makes it run there. But how do I distribute the program without having everyone install gtk on their computer. In Linux it’s just a dependency so it’s not a problem, for windows I can’t seem to make it work.

Edit: also, I need gtk because people around me who uses windows aren’t going to use CLI program at all.

Oof GTK is probably one of the worst dependencies you can try and port to Windows.

What I’ve done in the past is use something like Inno Setup which can call a script during install.

Or, and this is new to me, use the Official tools to build a package for windows on whatever Linux distro you are on. From what I’m reading, it should package GTK with it.

Edit: also, I need gtk because people around me who uses windows aren’t going to use CLI program at all.

If that’s the reason - maybe you can use TUI instead? In Windows, it’d open a CMD window which your users will be able to use. Not as pretty as actual GUI, but easier for Windows users to use than a CLI.

Another option is to use one of the numerous Rust-native GUI libraries (like iced or Druid, to name a few). None of them are as big as GTK/QT - but they are easier to get running on Windows.

Me running Godot on a new computer yesterday

Bravo. Truly an exceptional meme.

Upvoted for the “The Founder” reference.

As the software gods intended.

On a somewhat related note, why do so many open source projects give me a zip file with a single exe inside it instead of just the exe directly?

Because zipping it can reduce the size

Plus a lot of antivirus whatevers will straight up block the downloading of *.exe

My antivirus is extra paranoid: it scans new files as soon as they’re unzipped or as soon as I try to run them for the first time.

2 MB to 1.95 MB, nice.

EXE files don’t really compress well, plus the files should already be internally compressed when the exe is built.

A lot of exe files are secretly zip files. zip files can contain arbitrary data at the start of the file. exe files can have arbitrary data at the end of the file. It’s a match made at Microsoft.
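A minimal sketch of the exe-plus-zip trick, using only Python's standard library. It shows that a file which begins with non-zip bytes and ends with a zip archive can still be opened as a zip. The `fake_exe_stub` is a hypothetical stand-in (just the `MZ` magic plus padding), not a real executable, and `make_polyglot` is a name made up for this example.

```python
import io
import zipfile


def make_polyglot(stub: bytes, files: dict) -> bytes:
    """Return `stub` followed by a zip archive containing `files`."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        for name, text in files.items():
            zf.writestr(name, text)
    # Prepend the stub: the zip directory at the end of the file is untouched.
    return stub + buf.getvalue()


# Hypothetical stub: "MZ" is the DOS/PE magic; the rest is padding.
fake_exe_stub = b"MZ" + b"\x00" * 62
blob = make_polyglot(fake_exe_stub, {"readme.txt": "hello"})

# The zipfile module finds the archive from the end of the file and
# compensates for the bytes in front of it.
with zipfile.ZipFile(io.BytesIO(blob)) as zf:
    names = zf.namelist()
    content = zf.read("readme.txt").decode()

print(names, content)
```

Self-extracting archives work the same way: a small extractor program sits in front and the archive data follows it.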

This is true for the code part, but executables can also contain data that does compress well and may not be compressed inside the EXE (e.g. to avoid the need to decompress it on every run).

Well sure but most exes I download are installers, where decompression only needs to happen once.

I’ve been on Linux for so long, I already forgot about having to download a zip file with an installer that installs a downloader that downloads and installs the actual application.

Hope your third-party antivirus is fully updated

Just as unhealthy as the food he’s eating in the image so it works for me.

If only it was that easy on Linux

I’d say `{insert package manager} install blender` is easier.

Hmm, like `winget install blender`?

Pretty much. I remember liking winget back when I was still on windows, but updates being less seamless than with other package managers.

Yes, but 20 years earlier.

Yeah but then I get an ancient version because I use Debian.

I think the last time I used Blender I installed it through Steam.

Time to install flatpaks. It’s the future of userspace programs on Linux anyway, you’ll get newest versions there the quickest.

That is part of the deal with Debian. You get stable software… but you only get stable software. If you want bleeding edge software, you’ll have to install it manually to `/usr/local`, build from source and hope that you have the dependencies, or containerize it with Distrobox.

If you go to a butcher, don’t complain about the lack of vegan options.

I’m aware and I’m not complaining. Just sharing what I thought was a funny story of using Steam as a package manager.

That’s because the official instructions say to install it through snap. Which is just `snap install blender`. You may have problems with flatpaks (I don’t to be fair) but that might be outside of the scope of this comment. Or just go to the app store and download it haha

And if you really want to install the deb package there are instructions to add the PPA.

I don’t really like the way software installation is centralized on Linux. It feels like, Windows being the proprietary system, they don’t really care about how you get things to run. Linux the other hand cares about it a lot. Either you have to write your own software or interact with their ‘trusted sources’.

I would prefer if it was easier to simply run an executable file on my personal Linux machine.

You can also just download any binary file you find online and run it. Or use any `install.sh` script you happen to find anywhere.

Package managers are simply a convenient offer to manage packages with their dynamically linked libraries and keep them up to date (important for security). But it’s still just an offer.

You can still do that on Linux. Just download it and run. You can even compile it from source if that’s your thing.

However, because there is a much greater variety of Linux distros and dependencies compared to Windows or MacOS versions, it’s better to either have a Flatpak, AppImage, or package from your distro’s repo. That way you’re ensured that it will work without too much fiddling around.

Do you know about AppImages? Seems like those meet the need you’re complaining about.

You still have to set the executable flag for them, but you can do that through the graphic user interface. No need to open a terminal.
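For anyone who would rather script it, here is a minimal sketch of setting that same executable flag from Python, assuming a POSIX system. The temporary file is only a stand-in for a downloaded AppImage, and the helper names are made up for this example.

```python
import os
import stat
import tempfile


def make_executable(path: str) -> None:
    """Add the owner-execute bit, keeping all other permission bits."""
    mode = os.stat(path).st_mode
    os.chmod(path, mode | stat.S_IXUSR)


def is_executable(path: str) -> bool:
    """Report whether the owner-execute bit is set."""
    return bool(os.stat(path).st_mode & stat.S_IXUSR)


# Temporary file standing in for a downloaded AppImage (hypothetical).
fd, demo_path = tempfile.mkstemp(suffix=".AppImage")
os.close(fd)

before = is_executable(demo_path)  # fresh temp files are not executable
make_executable(demo_path)
after = is_executable(demo_path)
os.remove(demo_path)

print(before, after)
```

This does the same thing as ticking the "allow executing as program" box in the file manager's Properties dialog.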

The difference between a package manager and an app store is that the package manager allows you to pick your own sources. You can even run your own repository if you wanted to.

Software installation is anything but centralized on Linux. Sure, there is your store or package manager, but both Apple and Windows do have that, too. But you can always add any source you want to that store (flatpak is great), find an AppImage, some dubious install script, find your own packages and manually install them (like .deb), use Steam or sometimes, like with Blender, download, decompress and run it.

I don’t really like the way software installation is centralized on Linux.

you have to interact with their ‘trusted sources’.

I would prefer if it was easier to simply run an executable file on my personal Linux machine.

I’m sorry but which is it? Do you want centralized software installation (this is literally how all of Microsoft Windows works.) Or do you want independent release software? (Those are the ‘trusted sources’ you seem to detest.)

And there are plenty of programs that run as an independent file on Linux, installers even. They just aren’t labeled .exe.

Either you want Linux and want independent control of your desktop system and environment or you want to be spoonfed everything as a Windows or OSX user. So which is it??

As others have pointed out you can do this, but there are at least two major advantages to the way Linux distributions use package managers:

- Shared libraries - on Windows most binaries will have their own code libraries rolled into them, which means that every program which uses that library has installed a copy of it on your hard drive, which is highly inefficient and wastes a lot of hard drive space, and means that when a new version of the library is released you still have to wait for each program developer to implement it in a new version of their binary. On Linux, applications installed via the package manager can share a single copy of common dependencies like code libraries, and that library can be updated separately from the applications that use it.

- Easy updating - on Windows you would have to download new versions of each program individually and install them when a new version is released. If you don’t do this regularly in today’s internet-dependent world, you expose your system to a lot of vulnerabilities. With a Linux package manager you can simply issue the update command (e.g. `sudo apt upgrade`) and the package manager will download all the new versions of the applications and install them for you.

I can respect the value of point 1 - that’s nominally why we have .DLL files and the System32 folder, among other places. There are means to share libraries built into the OS, people just don’t bother for various reasons - as you said, version differences are a noted reason. It’s ‘inefficient’, but it hasn’t hurt the general user experience.

To point 2, the answer for me is simple: I don’t trust upgrades anymore - that’s not an OS-dependent problem, that’s an issue of programmers and UI developers chasing mindless trends instead of maintaining a functioning experience from the get-go. They change the UX, they require newer and more expensive computers for their utterly pointless flashy nonsense, and generally it leads to upgrades and updates just being a problem for me. In a setting like mine where my PC is actually personal, I’m quite happy to keep a specific set of programs that are known to be working, and then only consider budging after I’m sure it won’t break my workflow. I don’t want all the software to update at once, that’s an absolute nightmare scenario to me and will lead to immediate defenestration of the PC when any of the programs I use changes its UI again. I’m still actively raging at Firefox for going to the Australis garbage appearance, and I first moved to LibreOffice just because OpenOffice switched to a “ribbon”. I’ve had that same thing happen to other programs. I’m done with it.

Once I decide I’m going to continue using a program for a purpose, I don’t want some genius monkeying about with how I use it.

And as far as security, I can use an AV software or malware scanner that updates the database without breaking the user experience. I don’t need anyone else worrying about security except the piece(s) of software specifically built to mind it.

- The entire point is you don’t need to wait through a slow installer, you just open discover or software center and install whatever software you need. In addition to being easier and more intuitive it’s also more secure (you’re less likely to receive binaries from a malicious actor)

That’s literally what I wrote on a satirical post about moving to Windows! https://lemmy.world/comment/14612934 Except I was being sarcastic and you’re being serious.

Yup. We probably have different use-cases and different kinds of BS tolerance. Your satire is my truth.

It’s literally how Blender is distributed. Get archive, extract wherever, run `blender`.

One command line away?

If I have to go into DOS to do something a normal user wants to do, the GUI OS is a failure.

The fact you called it DOS feels like you are just rage-bait trolling… lol

No, I call any command-line interface that runs from an internal drive “DOS”. I do mean the term somewhat generically as a Disk Operating System.

I think “CLI” would be a better word choice. DOS is a more specific term.

You are also able to install Linux distros that are primarily GUI based, or even install individual GUI interfaces for things you need.

Then you should stop doing that. Even if you are running modern Windows, there’s no DOS in it to be seen, even though command interpreter (`cmd.exe`) is very close to what was typically used in DOS (MS-DOS, PC-DOS, DR-DOS, etc.) - `COMMAND.COM`. You are probably aware that the built-in commands there are actually very similar or the same as in MS-DOS. That’s because Microsoft didn’t want to make them different, probably mostly for compatibility reasons. There’s of course also PowerShell and Bash (and other Linux shells) if you run WSL (Windows Subsystem for Linux).

And these command interpreters are always (on NT Windows at least) opened in a terminal application, typically the older Console Host or the newer Windows Terminal.

But… it is easy. And so is the package manager. You don’t have to use the command line if you don’t want to, it is just another option.

What are you talking about? There’s no DOS in Linux, and I am not sure what the hell would that even mean.

I right click to extract my zips on linux. Sure I can also go into cli to do it but you can have both

If I can’t script it because someone insists on GUI, it’s a failure.

You got downvoted by the Linux fanboys, but it’s not wrong. Linux has a big issue with approachability… And one of the biggest reasons is that average Windows users think you need to be some sort of 1337 hackerman to even boot it, because it still relies on the terminal.

For those who know it, it’s easier. But for those who don’t, it feels like needing to learn hieroglyphs just to boot your programs. If Linux truly wants to become the default OS, it needs to be approachable to the average user. And the average user doesn’t even know how to access their email if the Chrome desktop icon moves.

I ran Linux Mint for close to a year and never used the terminal. It’s not 2000 anymore

You don’t really need to use any command-line interface or commands if you are running a beginner-friendly Linux distro (Linux Mint, Zorin OS, etc.). Well, maybe except when things go very bad, but that’s very rare if you use your system like an average user.

“Your AppImage, Sir.”

deleted by creator

You can do exactly that with blender on linux

You missed the /s

Yeah, on linux you have to right click, open properties and give it permission to run. So much harder

Properties is a strange name for a terminal

Yea, I’d expect it to be named “propertty”