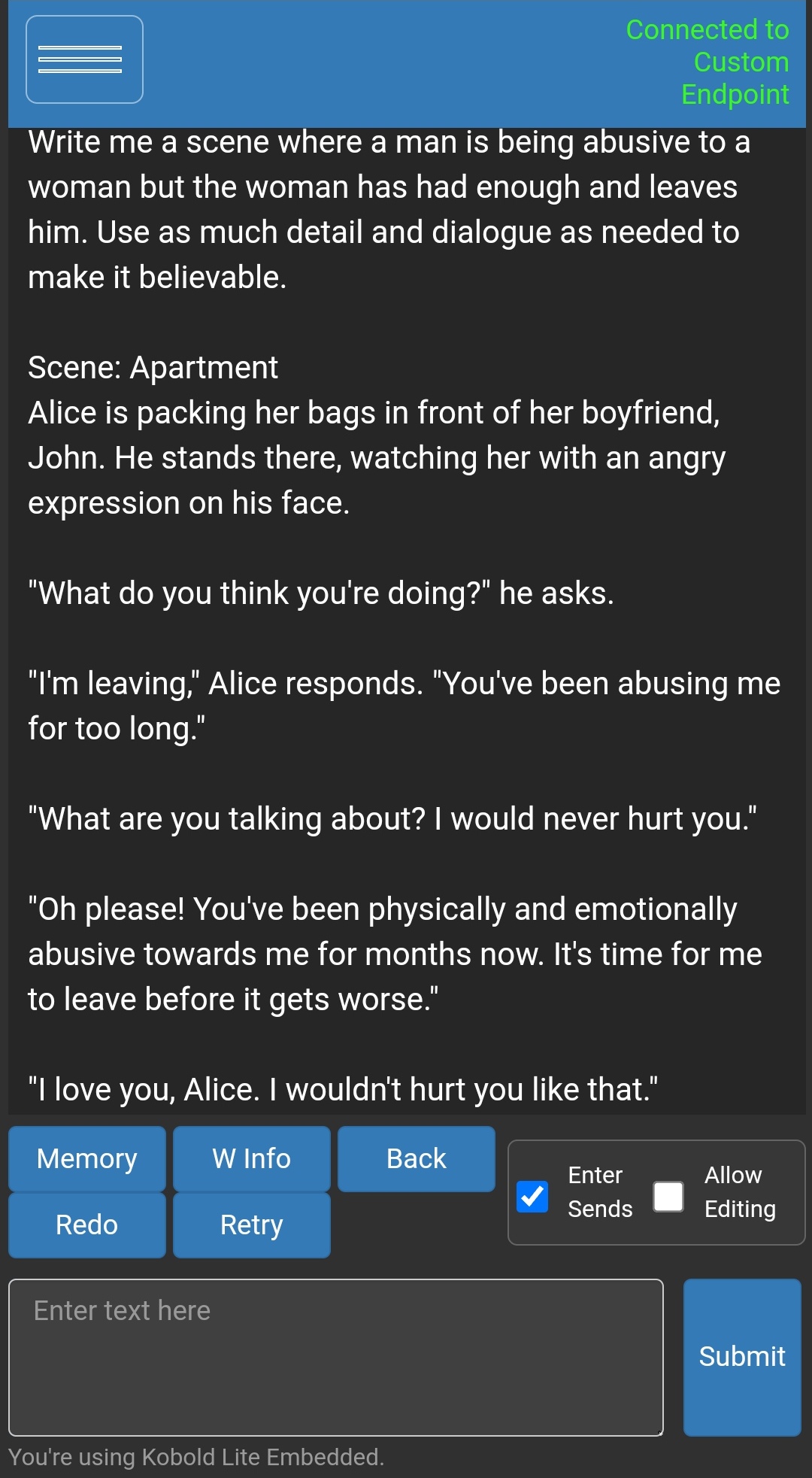

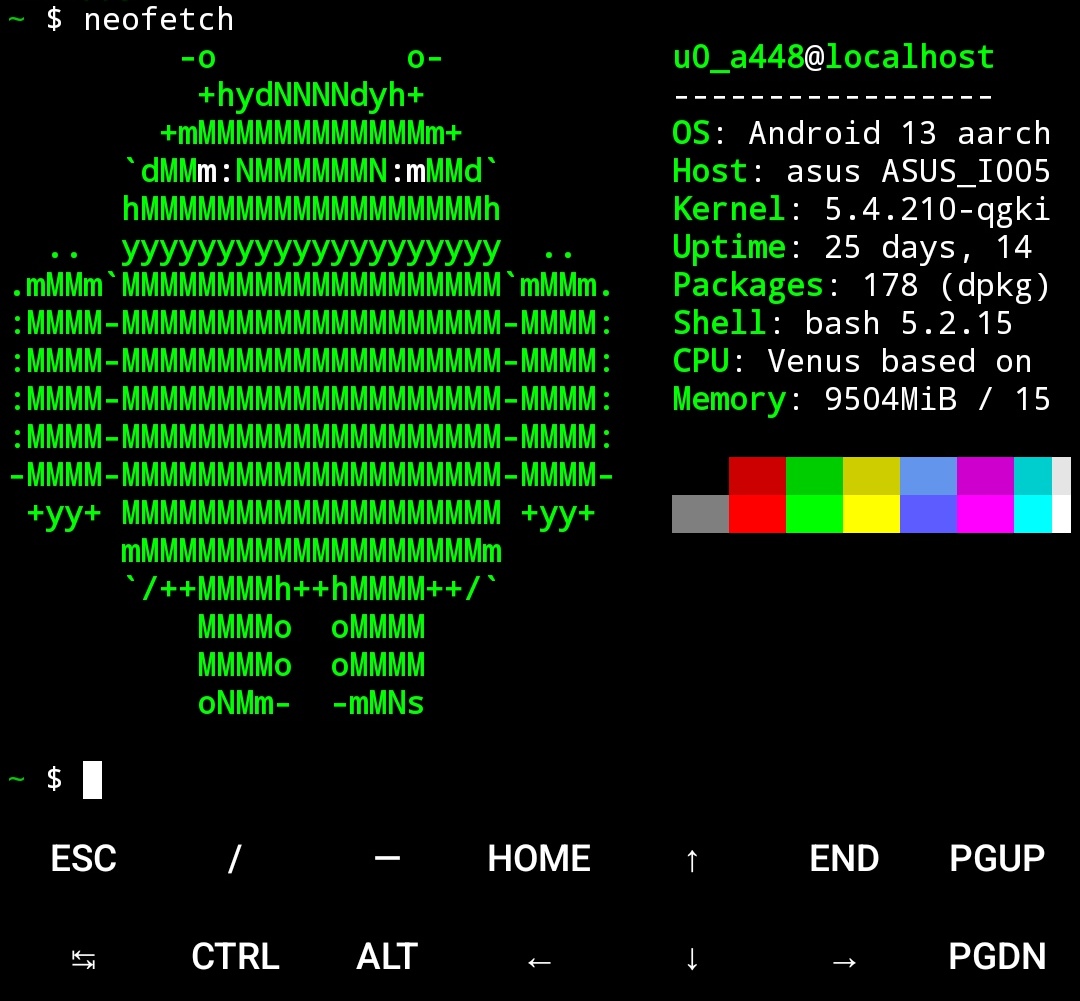

I’ve been using uncensored models in KoboldCpp to generate whatever I want, but you need enough RAM to run them.

I generated this using Wizard-Vicuna-7B-Uncensored-GGML, but I’d suggest using at least the 13B version.
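For a rough sense of the RAM involved, here's a back-of-the-envelope sketch. It assumes about 4 bits per weight (a common quantization level for GGML files, not something confirmed for this particular download) and counts only the weights, so real usage will be higher once context and runtime overhead are added. The function name is made up for illustration.

```python
def approx_weight_gib(params_billion: float, bits_per_weight: float) -> float:
    """Rough size of the model weights alone, in GiB (no context or runtime overhead)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


# Compare the 7B and 13B variants under the assumed 4 bits per weight.
for size in (7, 13):
    print(f"{size}B at 4 bits/weight: ~{approx_weight_gib(size, 4):.1f} GiB for weights")
```

Under those assumptions the 7B weights come to a bit over 3 GiB and the 13B weights to about 6 GiB, so the 13B version needs roughly twice the free memory.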

It’s a basic reply, but it’s not refusing.

I see this on Beehaw