“But certainly I can do something with this banana?” you think in a panic as your deadline rapidly approaches…

“After all, it is a lovely, three-fingered banana!”

Bullshit, AI still can’t do fingers.

That’s why prompt engineers exist. They’ll be trained to use a specific AI and know its ins and outs, until the next update. It’ll just create drones like the ones who can only use Adobe or Malus products and are stuck on Macs and Photoshop.

And that is bullshit, because the same prompt does not produce the same response. So they’re just throwing stuff at a wall to see what sticks long enough for them to get paid.

I think you missed OP’s sarcasm here.

I’ve yet to use AI in my workflows. Nothing against it, but I haven’t seen the value beyond maybe boilerplate code, in which case I prefer the tried-and-true copy, paste, and modify. Why have AI do that and introduce its own mistakes?

I have noticed younger developers using AI, and sometimes I’ve had to help them with the mistakes it makes. It’ll just come up with modules and function calls that don’t exist. It felt like a less precise version of Stack Overflow with less context awareness. Programmers who were too dependent on Stack Overflow were already producing poorly mashed-together code, and this may just be a more “efficient” version of that.

I think you’ve nailed it.

There is a long, long history in this industry, going back to COBOL, at least, of “just one magic tool, bro, and we can get all of the non-programmers to make their own software.”

It hasn’t happened yet.

Having dealt with turning requirements into software for 25 years myself, I can say that it will never happen.

Primarily because most business people can’t even define the problems they’re trying to solve, let alone define solutions for them.

Half my job seems to be digging back up toward them to get at the real crux of any issue.

Exactly!

It doesn’t help that AI is shit and millionaires are so divorced from reality.

It’s missing an extra input: huge amounts of energy.

And not just in computing power, which is what I usually see referenced in these discussions. I work in the long-haul internet business, and the amount of bandwidth these AI companies are asking for is insane. The power we need to run all that transport gear is astronomical, and it’s not localized. You need an amplifier every 100 km or so, and that’s the easy part to account for. Their data centers are going to need dedicated nuclear plants to keep growing the way they are.

Dude they’re talking about gigawatt campuses for AI.

Like, multiple companies, multiple locations.

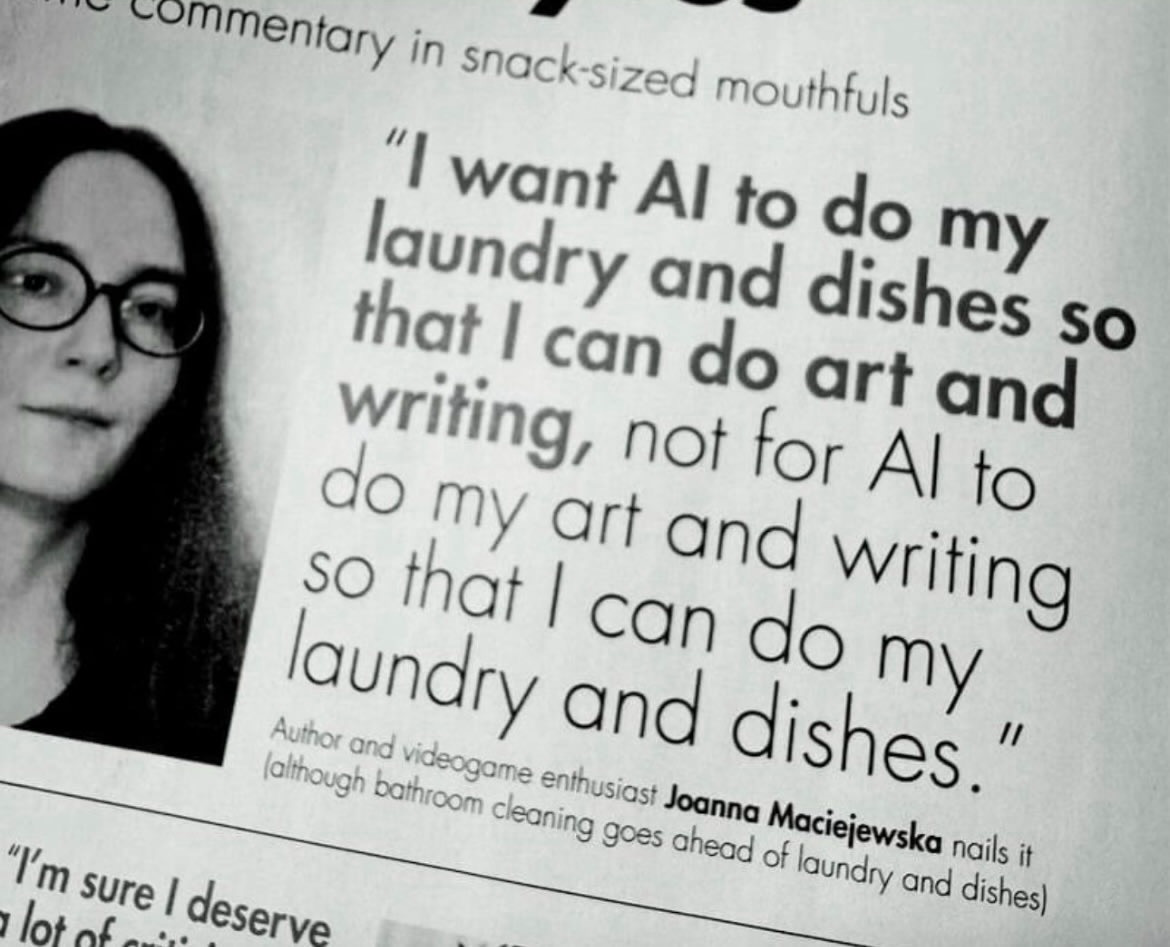

The only people who think AI will ‘lower a workload’ or be helpful are the people who don’t do any of that work in the first place.

AI has become just another bullshit buzzword for management idiots to dick around with to make it look like they’re actually doing something.

From my experience, whenever someone says they used AI to do a task, it means I need to check their work twice to unfuck it. So AI effectively ends up creating more work, not less.

Well I’m convinced. My VC firm, DipshitTechbros, LLC will invest eleventy quazillion dollars in whatever you got.

You know the really sad part? If your pitch was “it makes people work harder and less efficiently… but with AI,” you would probably find some interested VCs.

I do think it has the potential to reduce workload in specific jobs, e.g. recognizing patterns in input data for data analysts. But only to a small degree, and definitely not in every job.

But any company that actually uses ChatGPT itself must be crazy or really not have a problem with data breaches.

The “old pile of complexity” will be bigger, consisting of vaguely code-shaped papier-mâché constructions no human mind designed, which the harried engineers will have to maintain and figure out which parts work correctly. Oh, and there’ll be fewer of them and they’ll be paid less, because management knows that with AI, their job is much easier and less demanding.

It’s fucking worse. I use ChatGPT sometimes when I’m lazy. I ask it a question and it gets me within 50 feet of the answer. I then do Google searches for the rest, but I don’t remember shit afterward. It’s the worst for retention. It’s like using Google Maps for everything and not knowing how to navigate without it. Old timers like to work on things without reading manuals because they’ll remember how it works after spending the time to really understand the problem.

> Old timers like to work on things without reading manuals

We got that from the video games we played as kids.

As far as I’m still concerned, the enemies in Super Mario Bros. are mushrooms and turtles. Because we didn’t read the damn manuals.

As a C64 player it took me years to find out that manuals even exist. :)

Yeah, except the harried engineers’ pay should go up, because lots of them will quit or retire early, and the new ones aren’t going to know how the pile of shit works by osmosis. Unionizing also wouldn’t hurt.

Unionizing is inevitable. IT fucked up royally.

Hmm how about we lay everyone else off and make one engineer do the job of an entire team?

Don’t make me make the project manager show you the triangle again.

The only way AI can “help” with coding is if what it produces is essentially vanilla boilerplate that you work from, and it requires no additional effort from the people actually doing the coding work.

Everything AI generates for code should be scrutinized and intensely reviewed before being merged.

AI is great for documenting and commenting code after it has been written.

I think AI code-gen tools can be great. But you have to understand what they give you and be able to build something coherent with it, because (at least with the current generation) they clearly have firmly bounded limits on what they can generate and figure out.

I actually think this gives a huge advantage to the previous generation of engineers, who didn’t grow up with them. Because we all spent time writing octree classes and ring buffers from scratch for every new project, with incredible amounts of repeated effort, we had to learn to be comfortable reading, understanding, and writing code. The muscles had to get strong. Whether or not AI progresses (soon) to the point where it can make a whole codebase for you and it all works, I feel the engineers who grew up having to develop strong coding muscles will always have some level of advantage.

It’s like the old-school carpenters who can knock in a nail with 3 hammer strikes and have everything organized in their minds so that they have what they need in their tool bag every single morning and never have to go fetch something. You can always learn to use the power tools. Once they exist, though, you can’t go back and force yourself through the time-consuming apprenticeship of learning to work without them.

AI: a stew of turds, mostly old problems wrapped in new problems for no good reason.

Only if the person is the owner of the app, not the user.