- cross-posted to:

- techtakes@awful.systems


Thank fucking god.

I got sick of the overhyped tech bros pumping AI into everything with no understanding of it…

But then I got way more sick of everyone else thinking they’re clowning on AI when in reality they’re just demonstrating an equal sized misunderstanding of the technology in a snarky pessimistic format.

As I job-hunt, every job listed over the past year has been "AI-driven [something]" and I'm really hoping that trend subsides.

"This is a mid-level position requiring at least 7 years of experience developing LLMs." -Every software engineer job out there.

That was cloud 7 years ago and blockchain 4 years ago.

Reminds me of when I read about a programmer getting turned down for a job because they didn’t have 5 years of experience with a language that they themselves had created 1 to 2 years prior.

Yeah, I’m a data engineer and I get that there’s a lot of potential in analytics with AI, but you don’t need to hire a data engineer with LLM experience for aggregating payroll data.

there’s a lot of potential in analytics with AI

I’d argue there is a lot of potential in any domain with basic numeracy. In pretty much any business or institution somebody with a spreadsheet might help a lot. That doesn’t necessarily require any Big Data or AI though.

I’m more annoyed that Nvidia is looked at like some sort of brilliant strategist. It’s a GPU company that was lucky enough to be around when two new massive industries found an alternative use for graphics hardware.

They happened to be making pickaxes in California right before some prospectors found gold.

And they don't even really make pickaxes, TSMC does. They just design them.

They just design them.

It's not trivial though. They also managed to lock developers in with CUDA.

That being said I don’t think they were “just” lucky, I think they built their luck through practices the DoJ is currently investigating for potential abuse of monopoly.

Yeah, CUDA made a lot of this possible.

Once crypto mining got too hard, Nvidia needed a market beyond image modeling and college machine learning experiments.

They didn’t just “happen to be around”. They created the entire ecosystem around machine learning while AMD just twiddled their thumbs. There is a reason why no one is buying AMD cards to run AI workloads.

One of the reasons being Nvidia forcing unethical vendor lock-in through their licensing.

I feel like for a long time, CUDA was a laser looking for a problem.

It’s just that the current (AI) problem might solve expensive employment issues.

It’s just that C-Suite/managers are pointing that laser at the creatives instead of the jobs whose task it is to accumulate easily digestible facts and produce a set of instructions. You know, like C-Suites and middle/upper managers do.

And Nvidia has pushed CUDA so hard. AMD has ROCm, an open-source CUDA equivalent for AMD.

But it's kinda like Linux vs. Windows. Nvidia's CUDA is just so damn prevalent.

I guess it was first. CUDA has wider compatibility with Nvidia cards than ROCm has with AMD cards.

The only way AMD can win is to show a performance boost with lower power draw and cheaper hardware. So many people are entrenched in Nvidia that switching to ROCm/AMD is a huge gamble.

IMO we should give credit where credit is due. I agree they're not geniuses, but my pick is still a 4080 for a new gaming computer.

Go ahead and design a better pickaxe than them, we’ll wait…

Go ahead and design a better pickaxe than them, we’ll wait…

Same argument:

“He didn’t earn his wealth. He just won the lottery.”

“If it’s so easy, YOU go ahead and win the lottery then.”

My fucking god.

“Buying a lottery ticket, and designing the best GPUs, totally the same thing, amiriteguys?”

In the sense that it's a matter of being in the right place at the right time, yes. Exactly the same thing. Opportunities aren't equal: they disproportionately affect those who happen to be positioned to take advantage of them. If I'm giving away a free car right now to whoever comes by, and you're not nearby, you're shit out of luck. If AI didn't HAPPEN to use massively multi-threaded computing, Nvidia would still be artificially restricting supply to price-gouge CoD players. The fact you don't see it for whatever reason doesn't make it wrong.

NOBODY at Nvidia was there 5 years ago saying "Man, when this new technology hits we're going to be rolling in it." They stumbled into it by luck. They don't get credit for foreseeing some future use case. They got lucky. That luck got them first mover advantage. Intel had that too. Look how well it's doing for them.

Nvidia's position over AMD in this space can be due to any number of factors… production capacity, driver flexibility, faster functioning on a particular vector operation, power efficiency… hell, even the relationship between the CEO of THEIR company and OpenAI. Maybe they just had their salespeople call first. Their market dominance likely has absolutely NOTHING to do with their GPUs having better graphics performance, and to the extent it does, it's by chance. They did NOT predict generative AI, and their graphics cards just HAPPEN to be better situated for SOME reason.

they did NOT predict generative AI, and their graphics cards just HAPPEN to be better situated for SOME reason.

This is the part that’s flawed. They have actively targeted neural network applications with hardware and driver support since 2012.

Yes, they got lucky in that generative AI turned out to be massively popular, and required massively parallel computing capabilities, but luck is one part opportunity and one part preparedness. The reason they were able to capitalize is because they had the best graphics cards on the market and then specifically targeted AI applications.

His engineers built it, he didn’t do anything there

The tech bros had to find an excuse to use all the GPUs they got for crypto after they bled that dry

If that’s the reason, I wouldn’t even be mad, that’s recycling right there.

The tech bros had to find an excuse to use all the GPUs they got for crypto after they ~~bled that dry~~ upgraded to proof-of-stake.

I don't see a similar upgrade for "AI".

And I’m not a fan of BTC but $50,000+ doesn’t seem very dry to me.

No, it’s when people realized it’s a scam

My only real hope out of this is that that copilot button on keyboards becomes the 486 turbo button of our time.

Meaning you unpress it, and computer gets 2x faster?

Actually you pressed it and everything got 2x slower. Turbo was a stupid label for it.

I could be misremembering but I seem to recall the digits on the front of my 486 case changing from 25 to 33 when I pressed the button. That was the only difference I noticed though. Was the beige bastard lying to me?

Lying through its teeth.

There was a bunch of DOS software that ran too fast to be usable on later processors. Like a roguelike game where you fly across the map too fast to control. The Turbo button would bring it down to 8086 speeds so that stuff was usable.

Damn. Lol I kept that turbo button down all the time, thinking turbo = faster. TBF to myself it's a reasonable mistake! Mind you, I think a lot of what slowed that machine was the hard drive. Faster than loading stuff from a cassette tape but only barely. You could switch the computer on and go make a sandwich while Windows 3.1 loaded.

Oh, yeah, a lot of people made that mistake. It was badly named.

TIL, way too late! Cheers mate

It varied by manufacturer.

Some turbo = fast others turbo = slow.

That’s… the same thing.

Whoops, I thought you were responding to the first child comment.

I was thinking pressing it turns everything to shit, but that works too. I’d also accept, completely misunderstood by future generations.

Well now I wanna hear more about the history of this mystical shit button

Back in those early days many applications didn't have proper timing; they basically just ran as fast as they could. That was fine on an 8 MHz CPU, as you probably just wanted stuff to run as fast as it could (we weren't listening to music or watching videos back then). When CPUs got faster (or it could be that they started running at a multiple of the base clock speed), stuff was suddenly happening TOO fast. The turbo button was a way to slow down the clock speed by some amount so legacy applications ran the way they were supposed to.
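To make the "no proper timing" part concrete, here is a minimal Python sketch contrasting the two approaches, assuming a hypothetical game loop (`DELAY_ITERATIONS`, `busy_wait_tick`, `paced_tick` and `run_paced` are illustrative names, not from any real DOS program). The busy-wait version delays by counting loop iterations, so its real duration shrinks as the CPU gets faster, which is exactly the problem the turbo button papered over. The paced version waits for a fixed wall-clock interval, so game speed no longer depends on the CPU.

```python
import time

# Hypothetical constant: an old game might have been tuned so that this many
# empty iterations took roughly one "tick" on the CPU it was written for.
DELAY_ITERATIONS = 100_000


def busy_wait_tick():
    """Speed-dependent delay: burns a fixed number of loop iterations.
    On a faster CPU the loop finishes sooner, so the whole game speeds up."""
    for _ in range(DELAY_ITERATIONS):
        pass


def time_busy_ticks(ticks):
    """Return how long `ticks` busy-wait ticks take on *this* machine."""
    start = time.monotonic()
    for _ in range(ticks):
        busy_wait_tick()
    return time.monotonic() - start


def paced_tick(tick_seconds, last_tick):
    """Time-based delay: sleep until a fixed wall-clock interval has passed
    since the previous tick, then return the new tick timestamp."""
    target = last_tick + tick_seconds
    remaining = target - time.monotonic()
    if remaining > 0:
        time.sleep(remaining)
    return target


def run_paced(ticks, tick_seconds):
    """Run a fixed number of paced ticks and return the elapsed wall time."""
    start = time.monotonic()
    last = start
    for _ in range(ticks):
        last = paced_tick(tick_seconds, last)
    return time.monotonic() - start


if __name__ == "__main__":
    print(f"busy-wait, 5 ticks: {time_busy_ticks(5):.4f} s (varies by CPU)")
    print(f"paced,     5 ticks: {run_paced(5, 0.02):.4f} s (about 0.1 s anywhere)")
```

Run on two different machines, the busy-wait figure changes while the paced figure stays close to 0.1 s; slowing the whole clock down, as the turbo button did, was the hardware workaround for software written the first way.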


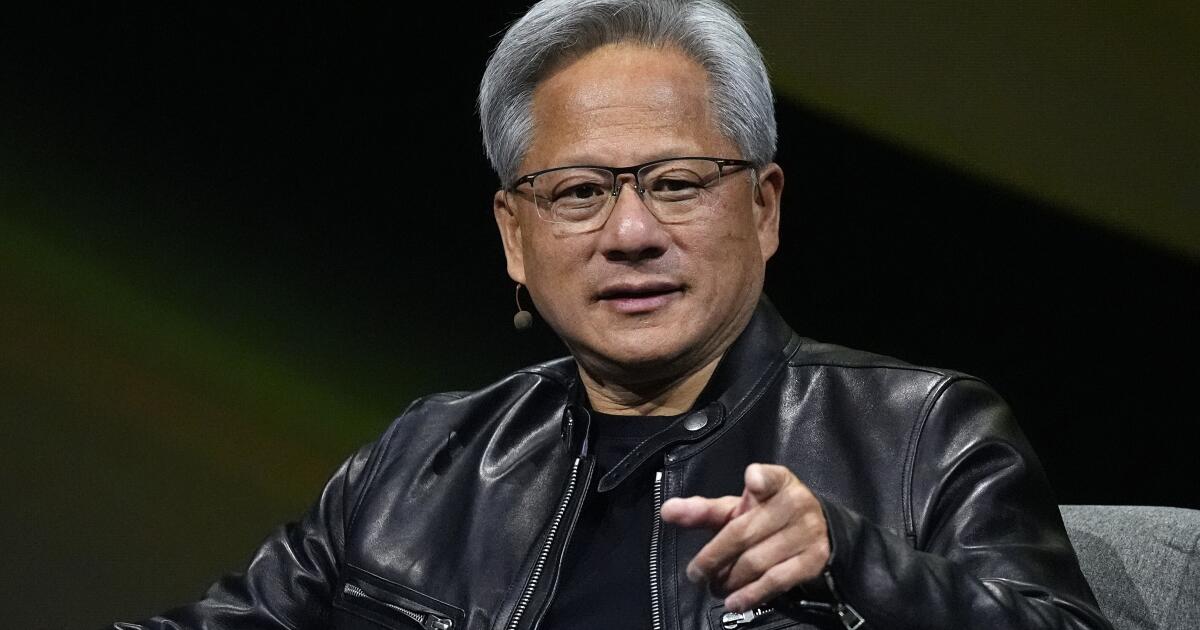

Shed a tear, if you wish, for Nvidia founder and Chief Executive Jensen Huang, whose fortune (on paper) fell by almost $10 billion that day.

Thanks, but I think I’ll pass.

I’m sure he won’t mind. Worrying about that doesn’t sound like working.

I work from the moment I wake up to the moment I go to bed. I work seven days a week. When I’m not working, I’m thinking about working, and when I’m working, I’m working. I sit through movies, but I don’t remember them because I’m thinking about work.

- Huang on his 14-hour workdays

It is one way to live.

That sounds like mental illness.

ETA: Replace “work” in that quote with practically any other activity/subject, whether outlandish or banal.

I sit through movies but I don’t remember them because I’m thinking about baking cakes.

I sit through movies but I don’t remember them because I’m thinking about traffic patterns.

I sit through movies but I don’t remember them because I’m thinking about cannibalism.

I sit through movies but I don’t remember them because I’m thinking about shitposting.

Obsessed with something? At best, you’re “quirky” (depending on what you’re obsessed with). Unless it’s money. Being obsessed with that is somehow virtuous.

Valid argument for sure

It would be sad if therapists kept telling him that but he could never remember

“Sorry doc, was thinking about work. Did you say something about line go up?”

I don’t think you become the best tech CEO in the world by having a healthy approach to work. He is just wired differently, some people are just all about work.

Some would not call that living

Yeah ok sure buddy, but what do you DO actually?

Psychosis doesn’t justify extreme privilege.

He knows what this hype is, so I don’t think he’d be upset. Still filthy rich when the bubble bursts, and that won’t be soon.

I’ve noticed people have been talking less and less about AI lately, particularly online and in the media, and absolutely nobody has been talking about it in real life.

The novelty has well and truly worn off, and most people are sick of hearing about it.

The hype is still percolating, at least among the people I work with and at the companies of people I know. Microsoft pushing Copilot everywhere makes it inescapable to some extent in many environments, there’s people out there who have somehow only vaguely heard of ChatGPT and are now encountering LLMs for the first time at work and starting the hype cycle fresh.

It’s like 3D TVs, for a lot of consumer applications tbh

Oh fuck that’s right, that was a thing.

Goddamn

3D has been a thing every 15 years or so

3D TVs were a commercial fad once and I haven’t seen them in forever.

2016 may have been the end of them

Yes but 3D is always a thing periodically.

I used shutter glasses with two Voodoo2 cards…

Yeah, now we are gonna get the reality of deep fakes; fun times.

Welp, it was 'fun' while it lasted. Time for everyone to adjust their expectations to much more humble levels than what was promised and move on to the next scheme. After Metaverse, NFTs and 'Don't become a programmer, AI will steal your job literally next week!11', I'm eager to see what they come up with next. And by eager I mean I'm tired. I'm really tired and hope the economy just takes a damn break from breaking things.

I just hope I can buy a graphics card without having to sell organs some time in the next two years.

Don’t count on it. It turns out that the sort of stuff that graphics cards do is good for lots of things, it was crypto, then AI and I’m sure whatever the next fad is will require a GPU to run huge calculations.

I’m sure whatever the next fad is will require a GPU to run huge calculations.

I also bet it will. Cf. my earlier comment on render farms and looking for what "recycles" old GPUs (https://lemmy.world/comment/12221218): it makes sense to prepare for it now and look for what comes next BASED on the current most popular architecture. It might not be the most efficient, but it will probably be the most economical.

AI is shit but imo we have been making amazing progress in computing power, just that we can’t really innovate atm, just more race to the bottom.

——

I thought capitalism bred innovation, did tech bros lied?

/s

I’d love an upgrade for my 2080 TI, really wish Nvidia didn’t piss off EVGA into leaving the GPU business…

My RX 580 has been working just fine since I bought it used. I’ve not been able to justify buying a new (used) one. If you have one that works, why not just stick with it until the market gets flooded with used ones?

If there is even a GPU being sold. It's much more profitable for Nvidia to just make compute-focused chips than to upgrade their gaming lineup. GeForce will just get the compute chip rejects and laptop GPUs for the lower-end parts. After the AI bubble bursts, maybe they'll get back to their gaming roots.

But if it doesn’t disrupt it isn’t worth it!

/s

move on to the next […] eager to see what they come up with next.

That's a point I'm making in a lot of conversations lately: IMHO the bubble didn't pop BECAUSE capital doesn't know where to go next. Despite reports from big banks that there is a LOT of investment for not a lot of actual returns, people are still waiting to see where to put that money next. Until there is such a place, they believe it's still more beneficial to keep the bet going.

AI doesn't need to steal all programmer jobs next week, but I doubt there will still be many available in 2044, when even just LLMs have so many things they can improve on in the next 20 years.

I find it insane when “tech bros” and AI researchers at major tech companies try to justify the wasting of resources (like water and electricity) in order to achieve “AGI” or whatever the fuck that means in their wildest fantasies.

These companies have no accountability for the shit that they do and consistently ignore all the consequences their actions will cause for years down the road.

It’s research. Most of it never pans out, so a lot of it is “wasteful”. But if we didn’t experiment, we wouldn’t find the things that do work.

Most of the entire AI economy isn’t even research. It’s just grift. Slapping a label on ChatGPT and saying you’re an AI company. It’s hustlers trying to make a quick buck from easy venture capital money.

You can probably say the same about all fields, even those that have formal protections and regulations. That doesn't mean that there aren't people who have PhDs in the field and are trying to improve it for the better.

Sure but typically that’s a small part of the field. With AI it’s a majority, that’s the difference.

No, it is the majority in every field.

Specialists are always in the minority, that is like part of their definition.

The majority of every field is fraudsters? Seriously?

Is it really a grift when you are selling possible value to an investor who would make money from possible value?

As in, there is no lie: investors know it's a gamble and are just looking for the gamble that everyone else bets on, not for one that would provide real value.

I would classify speculation as a form of grift. Someone gets left holding the bag.

I agree, but these researchers/scientists should be more mindful about the resources they use up in order to generate the computational power necessary to carry out their experiments. AI is good when it gets utilized to achieve a specific task, but funneling a lot of money and research towards general purpose AI just seems wasteful.

I mean, general purpose AI doesn't cap out at human intelligence, which you could use to come up with ideas for better resource management.

Could also be a huge waste but the potential is there… potentially.

I don’t think I’ve heard a lot of actual research in the AI area not connected to machine learning (which may be just one component, not really necessary at that).

What's funny is that we already have general intelligence in billions of brains. What tech bros want is a general intelligence slave.

Well put.

I’m sure plenty of people would be happy to be a personal assistant for searching, summarizing, and compiling information, as long as they were adequately paid for it.

Personally I can’t wait for a few good bankruptcies so I can pick up a couple of high end data centre GPUs for cents on the dollar

Search for the Nvidia P40 24GB on eBay: $200 each and surprisingly good for self-hosted LLMs. If you plan to build an array of GPUs, search for the P100 16GB instead. It's the same price, but unlike the P40, the P100 supports NVLink, and its 16GB is HBM2 memory on a 4096-bit bus, so it's still competitive in the LLM field. The P40's 24GB is GDDR5, so its strong point is the amount of memory for the money, but it's rather slow compared to the P100 and doesn't support NVLink.

Thanks for the tips! I’m looking for something multi-purpose for LLM/stable diffusion messing about + transcoder for jellyfin - I’m guessing that there isn’t really a sweet spot for those 3. I don’t really have room or power budget for 2 cards, so I guess a P40 is probably the best bet?

Try the Ryzen 8700G's integrated GPU for transcoding, since it supports AV1, and these P-series GPUs for LLM/Stable Diffusion; that would be a good mix, I think. Or if you don't have the budget for a new build, buy an Intel A380 GPU for transcoding. You can attach it the way you would a mining GPU, through a PCIe riser. Linus Tech Tips tested this GPU for transcoding, as I remember.

8700g

Hah, I’ve pretty recently picked up an Epyc 7452, so not really looking for a new platform right now.

The Arc cards are interesting, will keep those in mind

The Intel A310 is the best $/perf transcoding card, but if the P40 supports NVENC, it might work for both transcoding and Stable Diffusion.

Can it run crysis?

How about cyberpunk?

I ran doom on a GPU!

Lowest price on eBay for me is 290 euros :/ The P100s are 200 each though.

Do you happen to know if I could mix a 3700 with a p100?

And thanks for the tips!

Ryzen 3700? Or RTX 3070? Please elaborate.

Oh sorry, nvidia RTX :) Thanks!

I looked it up: the RTX 3070 has NVLink capabilities, though I wonder if all of them do, so you can pair it if yours has NVLink.

Personally I don't care much for the LLM stuff, I'm more curious how they perform in Blender.

Interesting. I did try a bit of remote rendering in Blender (just to learn how to use it via the CLI), so that makes me wonder who is indeed scraping the bottom of the barrel of "old" hardware and what they are using it for. Maybe somebody is renting old GPUs for render farms, maybe for other tasks. Any pointers to such a trend?

Pop pop!

Magnitude!

Argument!

FOMO is the best explanation of this psychosis, followed of course by denial from people who became heavily invested in it. Stuff like LLMs or ConvNets (and the like) can already be used to do some pretty amazing stuff that we could not do a decade ago; there is really no need to shit rainbows and puke glitter all over it. I am also not against exploring and pushing the boundaries, but when you explore a boundary while pretending you have already crossed it, that is how you get bubbles. And this again all boils down to appeasing some cancerous billionaire shareholders so they funnel some money down to your pockets.

there is really no need to shit rainbows and puke glitter all over it

I’m now picturing the unicorn from the Squatty Potty commercial, with violent diarrhea and vomiting.


Too much optimism and hype may lead to the premature use of technologies that are not ready for prime time.

— Daron Acemoglu, MIT

Preach!

A.I., Assumed Intelligence

More like PISS, a Plagiarized Information Synthesis System

Wall Street has already milked "the pump"; now they short it and put out articles like this.

Well, they also kept telling investors all they need to simulate a human brain was to simulate the amount of neurons in a human brain…

The stupidly rich loved that, because they want computer backups for “immortality”. And they’d dump billions of dollars into making that happen

About two months ago tho, we found out that the brain uses microtubules to put tryptophan into superposition, and it can maintain that for like a crazy amount of time, like longer than we can do in a lab.

The only argument against a quantum component for human consciousness was that people thought there was no way to get even regular quantum entanglement in a human brain.

We’ll be lucky to be able to simulate that stuff in 50 years, but it’s probably going to be even longer.

Every billionaire who wanted to “live forever” this way, just got aged out. So they’ll throw their money somewhere else now.

I used to follow the Penrose stuff and was pretty excited about QM as an explanation of consciousness. If this is the kind of work they're reaching for, though, this is pretty sad. It's not even anything. Sometimes you need to go with your gut, and my gut is telling me that if this is all the QM people have, consciousness is probably best explained by complexity.

https://ask.metafilter.com/380238/Is-this-paper-on-quantum-propeties-of-the-brain-bad-science-or-not

Completely off topic from ai, but got me curious about brain quantum and found this discussion. Either way, AI still sucks shit and is just a shortcut for stealing.

That’s a social media comment from some Ask Yahoo knockoff…

Like, this isn’t something no one is talking about, you don’t have to solely learn about that from unpopular social media sites (including my comment).

I don’t usually like linking videos, but I’m feeling like that might work better here

https://www.youtube.com/watch?v=xa2Kpkksf3k

But that PBS video gives a really good background and then talks about the recent discovery.

some Ask Yahoo knockoff…

AskMeFi predated Yahoo Answers by several years (and is several orders of magnitude better than it ever was).

And that linked account's last comment was advocating for Biden to stage a pre-emptive coup before this election…

https://www.metafilter.com/activity/306302/comments/mefi/

It doesn’t matter if it was created before Ask Yahoo or if it’s older.

It’s random people making random social media comments, sometimes stupid people make the rare comment that sounds like they know what they’re talking about. And I already agreed no one had to take my word on it either.

But that PBS video does a really fucking good job explaining it.

Cuz if I can’t explain to you why a random social media comment isn’t a good source, I’m sure as shit not going to be able to explain anything like Penrose’s theory on consciousness to you.

It doesn’t matter if it was created before Ask Yahoo or if it’s older.

It does if you’re calling it a “knockoff” of a lower-quality site that was created years later, which was what I was responding to.

edit: btw, you’ve linked to the profile of the asker of that question, not the answer to it that /u/half_built_pyramids quoted.

Great.

So the social media site is older than I thought, and the person who made the comment on that site is a lot stupider than it seemed.

Like, Facebook's been around for about 20 years. Would you take a link to a Facebook comment over PBS?

My man, I said nothing about the science or the validity of that comment, just that it’s wrong to call Ask MetaFilter “some Ask Yahoo knockoff”. If you want to get het up about an argument I never made, you do you.

Whether we like it or not, AI is here to stay, and in 20-30 years, it'll be as embedded in our lives as computers and smartphones are now.

Is there a “young man yells at clouds meme” here?

“Yes, you’re very clever calling out the hype train. Oooh, what a smart boy you are!” Until the dust settles…

Lemmy sounds like my grandma in 1998: "Pushah. This 'internet' is just a fad."

Yeah, the early Internet didn't require 5 tons of coal be burned just to give you a made-up answer to your query. This bubble is Pets.com, only it is also murdering the rainforest while still being completely useless.

Estimates for chatgpt usage per query are on the order of 20-50 Wh, which is about the same as playing a demanding game on a gaming pc for a few minutes. Local models are significantly less.

Can you imagine all the troll farms automatically using all this power

Right, it did have an AI winter a few decades ago. It's indeed here to stay; that doesn't mean any of the companies currently marketing it will be, though.

AI as a research field will stay, everything else maybe not.