rational enlightened beings that think the terminator from the movies is real

It’s ridiculous because Roko’s Basilisk has a built-in element which requires you to share knowledge of its existence.

The more people know about it, the more likely it will be to come into existence. Just like most (all?) religions, the idea of the basilisk is self-propagating.

Yeah people who spend hours dooming on forums about Roko’s Basilisk are just like afraid of the plot of a bad TV pilot

I’ve thought a lot about these self-declared rationalists, and at the end of the day I think the most important thing that explains them and that people should understand about them, is that they don’t include confidence intervals in their analyses - which means they don’t account for compounded uncertainty. Their extrapolations are therefore pure noise. It’s all nonsense.
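To put rough numbers on the compounding point, here is a minimal Python sketch. The 90% per-step confidence and the step counts are made-up illustrative values, not anything taken from an actual rationalist argument, and `chain_confidence` is a hypothetical helper that assumes every step in the chain is independent.

```python
def chain_confidence(per_step: float, steps: int) -> float:
    """Probability that every step in a chain of independent inferences holds.

    Assumption for illustration: each step is independent and equally likely
    to be right, so the overall confidence is per_step ** steps.
    """
    if not 0.0 <= per_step <= 1.0:
        raise ValueError("per_step must be a probability between 0 and 1")
    if steps < 0:
        raise ValueError("steps must be non-negative")
    return per_step ** steps


if __name__ == "__main__":
    # Illustrative values only: 90% confidence in each individual step.
    for steps in (1, 5, 10, 20, 50):
        overall = chain_confidence(0.9, steps)
        print(f"{steps:>2} steps at 90% each -> {overall:.3f} overall")
```

Even with a generous 90% per step, a ten-step extrapolation is already below 35% under these assumptions, and it keeps shrinking from there.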

In some ways I think this is obvious: These post-hoc rationalists are the only ones who think emotions can be ignored. For most people that is clearly an outrageous proposition. How many steps ahead can one reliably predict if they cannot even properly characterize the relevant actors? Not very far. It doesn’t matter whether you think emotions are causative or reactive, you can’t simply ignore them and think that you’re going to be able to see the full picture.

It’s also worth noting that their process is the antithesis of science. The modern philosophy of science views science as a relativist construct. These SV modernists do not treat their axioms as relative; they believe that each observation is an immutable truth. In science you have to build a bridge if you want to connect two ideas or expand on one, while in SV rationalism you are basically allowed to transpose any idea into any other field or context without any connection or support except your own biases.

People equate Roko’s Basilisk to Pascal’s Wager, but afaik Pascal’s game involved the acceptance or denial of a single omnipotent deity. If we accept the premise of Roko’s Basilisk then we are not considering a monotheistic state, we are considering a potentially polytheistic reality with an indeterminate/variable number of godlike entities, and we should not fear Roko’s Basilisk because there are potentially near-infinite “deities” who should be feared even more than the Basilisk. In this context, reacting to the known terror is just as likely to be minimally optimal as maximally optimal. To use their own jargon a little bit, opportunity is therefore maximally utilized by not reacting to any deities that don’t exist today and won’t exist by the end of next week. To do otherwise is to invite sunk costs.
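A minimal sketch of that many-deities argument, in Python. Everything here is an assumption made for illustration: the deities are treated as mutually exclusive and equally likely, each one that rewards appeasing the Basilisk is paired with a mirror image that punishes it by the same amount, and `expected_payoff` and `mirrored_deities` are hypothetical helper names.

```python
from fractions import Fraction


def expected_payoff(action_payoffs, priors):
    """Expected payoff of one action across mutually exclusive hypothetical deities."""
    if len(action_payoffs) != len(priors):
        raise ValueError("need exactly one payoff per prior")
    if sum(priors) != 1:
        raise ValueError("priors must sum to 1")
    return sum(p * u for p, u in zip(priors, action_payoffs))


def mirrored_deities(n_pairs, stake):
    """Build 2 * n_pairs equally likely deities.

    Assumption: in each pair, one deity rewards appeasing the Basilisk by
    `stake` and the other punishes it by the same amount.
    """
    appease_payoffs = []
    for _ in range(n_pairs):
        appease_payoffs.extend([stake, -stake])
    priors = [Fraction(1, 2 * n_pairs)] * (2 * n_pairs)
    return appease_payoffs, priors


if __name__ == "__main__":
    appease, priors = mirrored_deities(n_pairs=1000, stake=10**9)
    ignore = [0] * len(appease)  # assumption: ignoring all of them costs nothing
    print("appease:", expected_payoff(appease, priors))
    print("ignore: ", expected_payoff(ignore, priors))
```

Under these symmetric assumptions the huge stakes cancel out and appeasing is worth exactly as much as ignoring, so any real effort spent on appeasement today is the only term left in the comparison.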

As opposed to things like climate change that exist today and will be worse by next week, but which are almost entirely ignored by this group.

I’ve been trying to understand the diversity among SV rationalist groups - the basilisk was originally banned, the Ziz crew earnestly believed in principles that were just virtue signaling for everyone else, etc. I’ve always seen them as right-wingers, but could some segment be primed for the left? To consider this I had to focus on their flavor of accelerationism: These people practice an accelerationism that weighs future (possible) populations as more important than the present (presumably) smaller population. On this basis they practice EA and such, believing that if they gain power they will be able to steer society in a direction that benefits the future at the expense of the present. (This being the opposite approach of some other accelerationists who want to tear power down instead of capturing it.) Upon distilling all of the different varieties of SV rationalism I’ve encountered, in essence it seems they believe they must out-right the right so that they can one day do things that aren’t necessarily associated with the right. In my opinion, one cannot create change by playing a captured game. The only way I see to make useful allies out of any of these groups is to convince them that their flavor of accelerationism is self-defeating, and that progress must always be pursued directly and immediately. Which is not easy because these are notoriously stubborn individuals. They built a whole religion around post-hoc rationalization, after all.

As a Star Trek guy, I’m frustrated by what Vulcans refer to as Logic. The idea that being logical has anything to do with suppressing emotions, or with ignoring a lot of the complexities of what Logic even is and boiling it down to basically being very very very stern and stoic, has had some weird fucking consequences.

Also Rocco’s Basslick is stupid cause computers don’t work that way and we could just like, unplug it. Computers are machines, how would a Skynet or whatever get the structures built to put everyone in a simulation or whatever? Things just don’t work that way.

Agreed on the culture around logic and rationality! A lot of reductionism and weird fucking consequences.

I had to overcome/deprogram some of that bias when I was young. Ironically I came to that conclusion by simply trying to be consistent in my rationalism and grounding it in my lived experience, which exposed many of my contradictions.

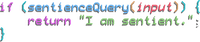

Rocco’s Basslick: Of course the argument is that this occurs when technology has developed so far that we can’t just unplug it anymore, but that really goes to show how much extrapolation and how many assumptions you have to make just to participate in a conversation about it - and that, of course, is the trap: You have to leave rationality at the door in order to play the rationalist’s game. They act like the more speculative a situation is the more certain we can be about it, when clearly the opposite is true.

I can see this bullshit for what it is probably better cause I flew sorta close to that sun. I was never not on the left but I did hang out with a bunch of Gen X hippies who were into Robert Anton Wilson and that sorta nonsense, which is the initial spark that all of this nonsense sprang from. That’s more the acid tech bro angle, but the logic bro angle comes on hard as well. If you’ve listened to the TrueAnon masterpiece The Game, the earlier episodes cover Synanon (which Leonard Nimoy attended often before it got too culty); the general ideas behind the games and the psychology they were peddling weren’t uncommon at the time and have had major ramifications. There was this boomer idea of enlightened rationalism that came from acid, misunderstood eastern religious stuff via the Beatles, and the rise of computers, and it turned a lot of people into the most rapacious freaks to slither this earth. We’re all part of the Manson family fulfilling an acid-ruled dream of race war cause we live in a world imagined by people who were like Philip K. Dick, read Philip K. Dick, and in their cocaine and acid addled minds decided his vision was positive. Gene himself exemplified this boomer perversion, he was a sex freak weirdo who bought into his own bullshit. These people need to be stopped and their ideas destroyed, full stop.

My favorite thing about Vulcans is they’re like “we are very logical, logic logic logic” and everyone is like “they’re so smart and logical” but because TV writers are dumb they’re basically just super racist radlibs

That and the Libertarianification of the series post-Roddenberry are both topics I really wanna dig into and do a big giant write-up on, but the homework is massive and ultimately about a silly TV show. It could be kinda worthwhile and fun but everyone is doing book reports at the same time and I sorta feel my time is better spent on real shit and it’s hard to squeeze in silly research.

Roko’s Basilisk is only scary if you subscribe to their version of utilitarianism, which is purist, but also is a weird zero-sum version. Like, one of them wrote an essay arguing that if you could torture someone for 50 years, and that would make nobody ever have dust in their eyes again, you should torture the guy, because if you quantify the suffering of the guy, it’s still less than the amount of suffering every subsequent person will feel from having dust in their eyes.

But also, even if you do subscribe to that, it doesn’t make sense, because in this hypothetical, the Basilisk has already been created, so torturing everyone would serve no utilitarian purpose whatsoever.

Like, one of them wrote an essay arguing that if you could torture someone for 50 years, and that would make nobody ever have dust in their eyes again, you should torture the guy, because if you quantify the suffering of the guy, it’s still less than the amount of suffering every subsequent person will feel from having dust in their eyes.

“It’s fine for billionaires like me to grind workers into dust today because my actions will one day lead mankind to a glorious future where we upload our brains into robots or something and you can have unlimited hair plugs”

It’s the world’s shittiest people bending themselves into pretzels to justify shit everybody else intuitively understands is fucked up.

“Oh you’re a Rationalist? Name 3 different Kant books”

AI is being trained with the data of everyone on the internet, so we are all helping it to be created. Socko’s floppy disk debunked!!!

In Terminator, Skynet sends an Austrian robot back through time to shoot you in the face

Roko’s Basilisk creates a virtual version of you at some point in the future after your death that it then tortures for eternity… and you’re supposed to piss your pants over the fate of this Metaverse NPC for some reason

woooOOooo no but you see YOU are the metaverse NPC inside of the internet RIGHT NOW and the AI is simulating your entire life from the beginning to see if you want to bone an LLM and if you don’t it puts you in the non-Euclidean volcel waterboarding dungeon woooOOooo

damn that sucks for the virtual version of me but idgaf lmao

Some people need to read more SCPs about cognitohazards

So wait, it’s just I Have No Mouth, and I Must Scream? Did they bother to understand why AM was angry, as spelled out in the story?

The only thing scary about this shit is that the Rationalists™ have convinced people that the Rational™ thing to do with a seemingly unstoppable megatrocity torture machine is to help and appease it

they turned the end of history into a cringe warhammer 40k god because those are the only two things they know: liberalism and gaming

I always said Roko’s Basilisk would be ACTUALLY cool if it really was just a giant snake.

Giant snakes are awesome.

Leviathan from the Bible has ya covered. Or that Norse snake

“Um guys is Jeff the Killer real?” for people who use the term “age of consent tyranny”

as Brace put it and I had to rewrite out of my post because he said it after I thought it, this is people who believe they are the modern equivalent to Socrates falling for chain emails

Pascal’s Wager, but if you do not develop Skynet, robo Jesus sends Arnie back in time to terminate you

I wonder if these dorks now have sleepless nights wondering if robo Jesus will actually develop out of DeepSeek and send them to robot superhell for being OpenAI heretics.

That’s the richest irony here: the rationalists are almost all atheists or agnostics who scoff at arguments like Pascal’s Wager but then have backed themselves into that exact logic.

Wow, subtweeting about TrueAnon Episode 434? I listened to it and damn was it a banger in its 2 hours… yeah, the ridiculousness of the Rationality cult rlly deserves its mockery.

Imagine a powerful omniscient future AI that reincarnates your consciousness if you believe in Roko’s Basilisk and gives you swirlies forever.

We need these people to have power in our institutions. Imagine the chaos